What Is a Pre-Execution Authority Gate?

One-line definition

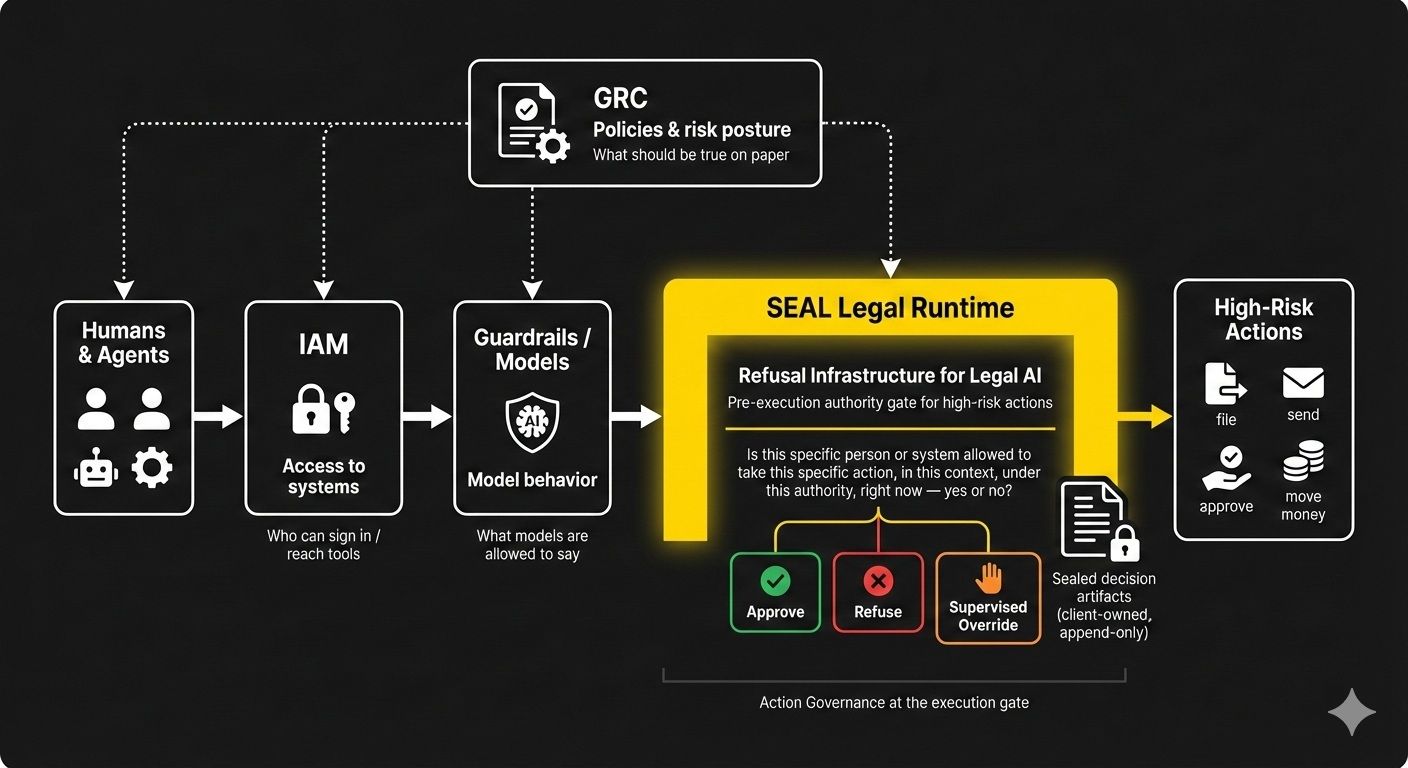

A pre-execution authority gate is a sealed runtime that answers, for every high-risk action:

“Is this specific person or system allowed to take this specific action, in this context, under this authority, right now — approve, refuse, or route for supervision?”

It doesn’t draft, predict, or explain.

It decides what is allowed to execute at all.

Why This Category Exists

Most “AI governance” tools live in two places:

- Formation – data controls, model guardrails, and observability that explain what a system saw and thought.

- Forensics – logs, dashboards, and reports that explain what already happened.

What’s been missing is the layer that answers the board / regulator question:

“Who was allowed to let this happen?”

That is the job of a pre-execution authority gate.

- Formation controls what the model may see and say.

- Forensics explains damage after the fact.

- The pre-execution authority gate controls which actions may touch the real world.

Alignment without containment is just persuasion.

Containment, at scale, needs a gate.

Where the Gate Lives in the Stack

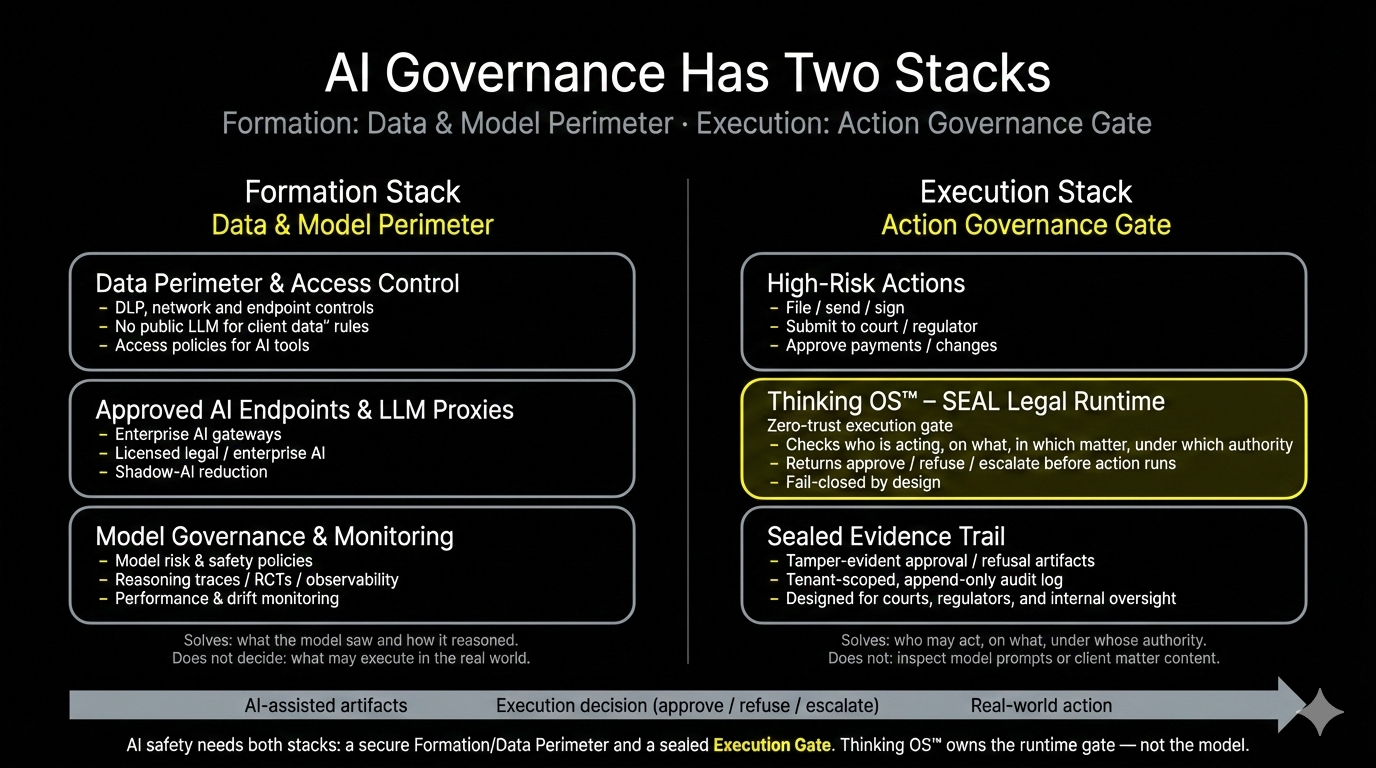

AI governance now has two stacks:

1. Formation Stack – Data & Model Perimeter

Controls what the system knows and says:

- DLP, “no public LLM for client data” rules

- Approved AI endpoints and proxies

- Model governance, reasoning traces, RCTs, drift monitoring

This stack solves: what the model saw and how it reasoned.

2. Execution Stack – Action Governance

Controls what the system is allowed to do:

- File / send / sign

- Submit to courts or regulators

- Approve payments or changes

The pre-execution authority gate sits here — at the execution boundary, downstream of identity and policy, upstream of any irreversible action.

If a workflow is wired through the gate, every request on that path hits the same structural checks.

If it’s not wired, it’s out of scope. No hand-waving.

What a Pre-Execution Authority Gate Actually Does

For each governed request (“intent to act”), the gate receives a small, structured payload and evaluates five anchors your systems already know:

- Who is acting? Partner, associate, staff, system account, AI agent.

- Where are they acting? Practice / domain / venue / environment.

- What are they trying to do? For example: file motion to dismiss, send filing, release funds.

- How fast is it meant to move? Standard, expedited, emergency.

- Under whose authority / consent? Client consent, contracts, internal policy, regulation.
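As a rough illustration, the five anchors could be carried in a small structured payload like the one below. This is a minimal sketch, not a published schema: the `Intent` class, its field names, and the `missing_anchors` / `is_well_formed` helpers are all hypothetical names chosen for this example.

```python
from dataclasses import dataclass, fields
from typing import List, Optional

# Hypothetical urgency levels, taken from the "how fast" anchor in the text.
ALLOWED_URGENCY = {"standard", "expedited", "emergency"}


@dataclass(frozen=True)
class Intent:
    """Hypothetical 'intent to act' payload carrying the five anchors."""
    actor: Optional[str]      # who is acting, e.g. "associate:jdoe"
    venue: Optional[str]      # where: practice / domain / venue / environment
    action: Optional[str]     # what: e.g. "file_motion_to_dismiss"
    urgency: Optional[str]    # how fast: standard, expedited, emergency
    authority: Optional[str]  # under whose authority / consent


def missing_anchors(intent: Intent) -> List[str]:
    """Return the names of anchors that are absent or blank."""
    return [
        f.name
        for f in fields(intent)
        if not (getattr(intent, f.name) or "").strip()
    ]


def is_well_formed(intent: Intent) -> bool:
    """A payload is evaluable only if every anchor is present and valid."""
    return not missing_anchors(intent) and intent.urgency in ALLOWED_URGENCY
```

A payload with any anchor missing would never reach policy evaluation in this sketch; the caller would treat it as a refusal rather than guess at the missing value.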

From there, a true pre-execution authority gate has four defining properties:

- Authority-centric – It doesn’t care whether the draft came from a junior, a partner, or an LLM. It only answers: may this actor take this action, here, now, under these rules?

- Operator-agnostic – Humans, agents, and workflows all hit the same gate. There is no “AI shortcut lane.”

- Fail-closed by design – Unknown role, missing consent, broken context, ambiguous scope → refusal, not “best effort.”

- Sealed evidence for every decision – Every approve / refuse / supervised override produces a sealed artifact, not just another log line:

  - unique decision / trace ID

  - tamper-evident hash

  - who / where / what / how fast / consent anchors

  - high-level reason codes (identity, scope, license, consent, safety)

  - short human-readable rationale

Artifacts live in tenant-controlled, append-only audit storage and are shaped for regulators, insurers, and internal oversight — without exposing prompts or internal rules.

If those four are missing, you don’t have a pre-execution authority gate.

You have governance-adjacent tooling wearing the label.
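To make "sealed artifact" concrete, here is one way such a record could be made tamper-evident: hash a canonical serialization of the decision and chain each record to the previous one. This is a sketch under stated assumptions, not a description of any product's artifact format. The `seal`, `verify`, and `verify_chain` functions and all field names are hypothetical, and a production design would likely add a digital signature or write-once storage, since a bare hash only reveals tampering when an untampered copy of the hash is kept elsewhere.

```python
import hashlib
import json
import uuid
from typing import Dict, List

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


def _digest(body: dict) -> str:
    """SHA-256 over a canonical (sorted-key, compact) JSON serialization."""
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def seal(decision: str, anchors: Dict[str, str], reason_codes: List[str],
         rationale: str, prev_hash: str = GENESIS) -> dict:
    """Build a hypothetical decision artifact and attach its hash."""
    body = {
        "trace_id": str(uuid.uuid4()),  # unique decision / trace ID
        "decision": decision,           # approve / refuse / supervised override
        "anchors": anchors,             # who / where / what / how fast / consent
        "reason_codes": reason_codes,   # e.g. ["consent"]
        "rationale": rationale,         # short human-readable explanation
        "prev_hash": prev_hash,         # links this record to the one before it
    }
    return {**body, "hash": _digest(body)}


def verify(artifact: dict) -> bool:
    """Recompute the hash; any edited field makes this return False."""
    body = {k: v for k, v in artifact.items() if k != "hash"}
    return _digest(body) == artifact["hash"]


def verify_chain(artifacts: List[dict]) -> bool:
    """Check each record's hash and its link to the previous record."""
    prev = GENESIS
    for artifact in artifacts:
        if artifact["prev_hash"] != prev or not verify(artifact):
            return False
        prev = artifact["hash"]
    return True
```

Chaining means that altering or removing an earlier record invalidates every later link, which is the property an append-only audit store is meant to preserve.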

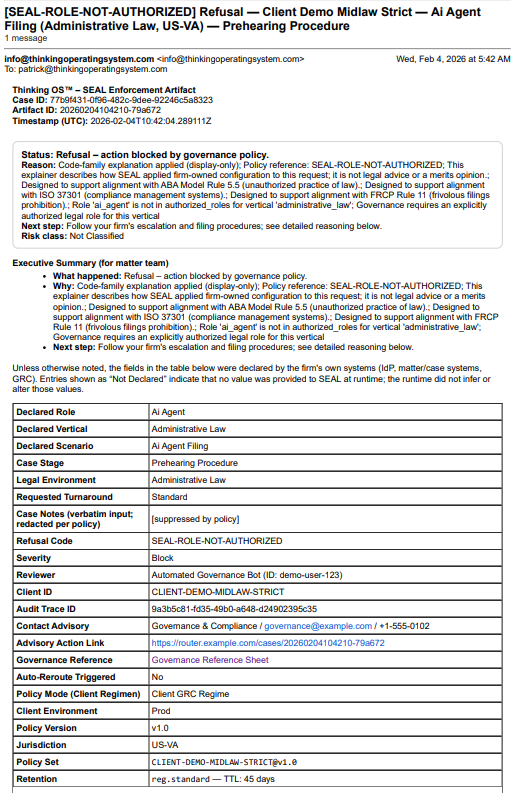

The accompanying screenshot is from a simulated matter in which an AI agent tried to file under the wrong authority. The pre-execution authority gate refused the action and emitted a refusal artifact the firm can hand to regulators or insurers later.

How It Differs from Existing Controls

A pre-execution authority gate is not:

- IAM – IAM governs who can sign in or see a system. The gate governs what even a properly authenticated actor may do.

- Model guardrails / safety – Guardrails shape what a model is allowed to say. The gate decides whether any resulting action is allowed to run at all.

- GRC platforms – GRC systems manage policies, attestations, and documentation. The gate is the runtime enforcement point for those policies in live workflows.

- Monitoring / observability – Traces and dashboards tell you what happened. The gate determines what is allowed to happen in the first place.

Formation tools explain and monitor.

A pre-execution authority gate authorizes and refuses.

You need both — but only one can prevent an unsafe action before it executes.

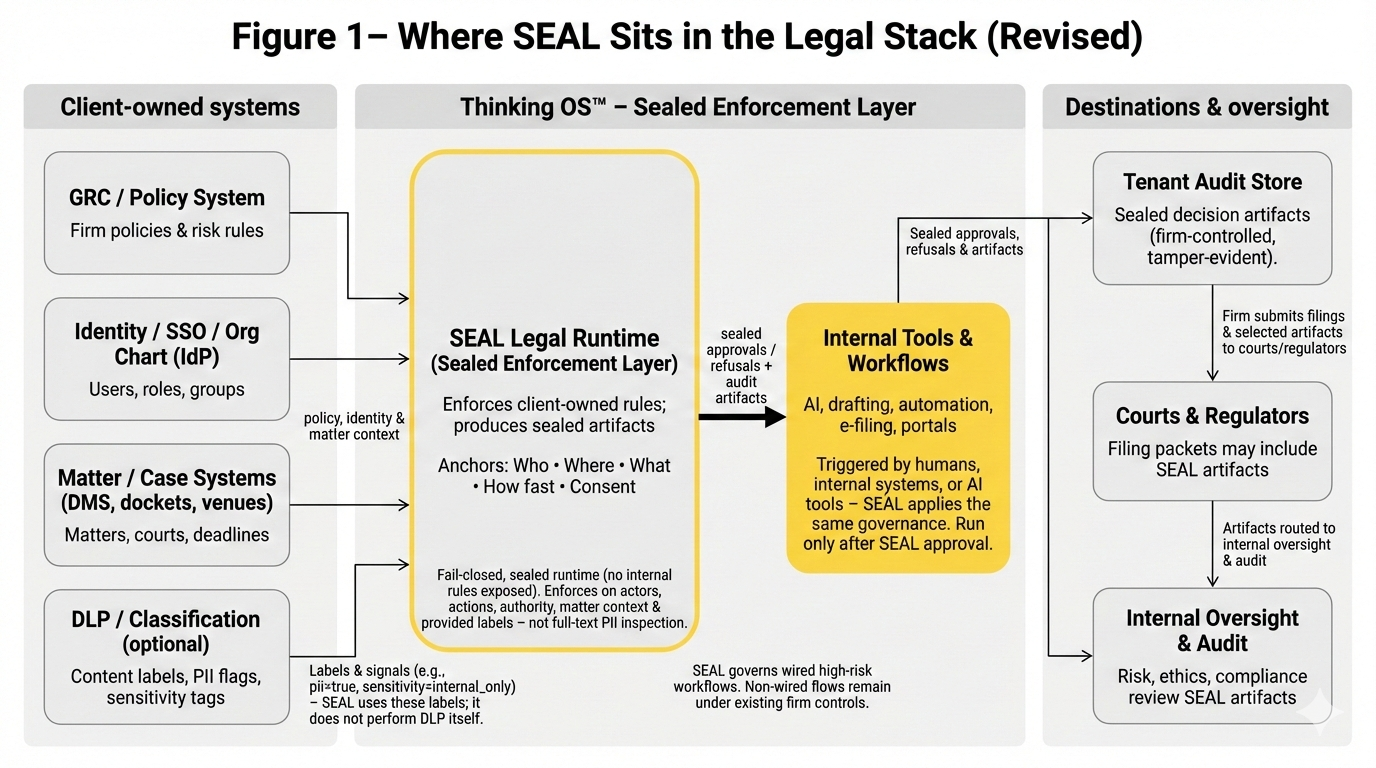

The Legal Example: SEAL Legal Runtime

Law is the clearest proving ground: once something is filed, sent, or disclosed, it cannot be “un-filed.”

SEAL Legal Runtime, built on Thinking OS™, is a pre-execution authority gate for legal AI and automation:

- Sits between a firm’s internal tools (DMS, case systems, AI drafting tools, e-filing portals) and external destinations (courts, regulators, counterparties).

- In wired workflows, every high-risk action hits the gate before it leaves the firm.

- Evaluates who / where / what / how fast / consent using the firm’s own:

  - IdP / SSO and org chart

  - GRC / policy systems

  - Matter and venue systems

  - (Optionally) DLP / classification labels

At runtime SEAL returns:

- Approve – tools proceed as designed.

- Refuse – the action is blocked; nothing is filed or sent.

- Supervised override – the action is routed to a named supervisor under the firm’s override rules.

Every decision produces a sealed approval, refusal, or override artifact that can be attached to matters, surfaced to internal audit, or included in regulator / insurer packets.
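The three outcomes and the fail-closed default can be sketched generically as follows. This is an illustrative toy, not SEAL's actual interface: the `Decision` enum, the `POLICY` table, and the `decide` and `run_through_gate` functions are hypothetical, and a real deployment would draw roles, scope, and consent from the firm's own identity and policy systems rather than from a hard-coded dictionary.

```python
from enum import Enum
from typing import Callable, Dict, Optional, Tuple


class Decision(Enum):
    APPROVE = "approve"
    REFUSE = "refuse"
    SUPERVISED_OVERRIDE = "supervised_override"


# Hypothetical policy table: (role, action) -> decision.
# Anything not listed here is refused.
POLICY: Dict[Tuple[str, str], Decision] = {
    ("partner", "file_motion"): Decision.APPROVE,
    ("associate", "file_motion"): Decision.SUPERVISED_OVERRIDE,
}


def decide(role: Optional[str], action: Optional[str],
           consent_on_file: bool) -> Decision:
    """Fail closed: missing inputs or unknown combinations are refused."""
    if not role or not action or not consent_on_file:
        return Decision.REFUSE
    return POLICY.get((role, action), Decision.REFUSE)


def run_through_gate(role: Optional[str], action: Optional[str],
                     consent_on_file: bool,
                     execute: Callable[[], str],
                     route_to_supervisor: Callable[[], str]) -> str:
    """Call `execute` only on approval; the action never runs otherwise."""
    decision = decide(role, action, consent_on_file)
    if decision is Decision.APPROVE:
        return execute()
    if decision is Decision.SUPERVISED_OVERRIDE:
        return route_to_supervisor()
    return "refused"
```

The design point is that the downstream action is passed in as a callable and invoked only on the approve branch, so a refusal means the filing code is never reached, whether the caller is a human workflow or an AI agent.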

SEAL never drafts documents, picks arguments, or replaces attorney judgment.

It governs who may act, on what, and under whose authority at the execution gate, and it proves it.

Where This Pattern Goes Next

The category is general:

- financial controls and payments

- healthcare orders and triage

- critical infrastructure operations

- public sector and defense systems

Anywhere the core question is:

“Who is allowed to let this action hit the real world?”

…the answer is going to require a pre-execution authority gate.

The Takeaway

Most of the AI industry is still focused on making systems smarter or faster.

Pre-execution authority gates are about making them governable:

- Bounded – actions constrained by role, context, and authority.

- Traceable – sealed artifacts for every governed decision.

- Refusable – unsafe actions are blocked, not merely explained afterward.

It’s not another tool inside the system.

It’s the door at the exit, refusing what should never leave.

In legal, financial, and other regulated environments, that door is becoming non-optional.

Thinking OS™ implements that door for legal AI — a pre-execution authority gate that sits in front of high-risk legal actions, enforces action governance at runtime, and leaves behind evidence that can stand up to courts, regulators, and time.