The Missing Fourth Layer of AI Governance: Who’s Allowed to Act at All

Action Governance — who may do what, under what authority, before the system is allowed to act.

For the last twenty years, we’ve been building governance for AI and automation in the wrong order.

We focused on data governance.

Then we layered on model governance.

All on top of long-standing security and access governance.

All of that matters.

But in the middle of the excitement, we skipped the one layer that actually decides whether an organization survives contact with AI in the real world:

Action Governance — who may do what, under what authority, before the system is allowed to act.

Every headline you’ve seen about “AI gone wrong” is just a symptom of that missing layer.

- We have rules for information.

- We have rules for models.

- We have rules for access.

We do not have rules for the moment of execution.

That’s the hard truth.

And it’s where the next decade of AI governance will be won or lost.

1. How We Got Here: Three Layers and a Blind Spot

The modern enterprise did what made sense at the time.

Data governance:

- What data do we have?

- Who owns it?

- Where does it live?

- How do we classify, retain, and protect it?

Model governance:

- How is the model trained?

- What data went into it?

- Does it drift?

- Is it biased?

- How do we monitor performance?

Security governance:

- Who can log in?

- What systems can they see?

- What’s exposed to the internet?

- Where are the firewalls, the IAM, the SOC?

Those three layers grew up in a world where systems were mostly deterministic:

If input = A, output = B. Every time. Predictably. Testably.

You could put a control behind the system and feel safe:

- If something looked wrong, you patched it.

- If a user misbehaved, you revoked access.

- If a report was off, you traced it back and fixed the data.

The assumption was always the same:

“We control the box. We can fix it after the fact.”

AI — especially generative, autonomous, and agentic AI — broke that assumption.

2. What AI Changed: Speed, Autonomy, and Distance From Oversight

In an AI-mediated environment, three things happen at once:

- Speed: Systems act in milliseconds. Governance thinks in meetings.

- Autonomy: Agents make decisions and trigger actions without a human in the loop at every step.

- Distance: The people accountable for outcomes are often far away from where the system takes action.

That’s how you end up with:

- A model that was approved for fraud analysis quietly denying credit.

- A chatbot turning a customer complaint into a legal promise.

- An AI assistant generating a court filing under the wrong attorney’s authority.

- A coding agent deleting a live database because a prompt “sounded” close enough.

None of these failures start with bad data or a malicious actor. They start with something much simpler:

The system was allowed to act without a pre-execution check on its authority.

The question that should have been asked first — but wasn’t — is:

“Is this specific action, in this context, allowed to run at all?”

That is the question data governance, model governance, and security governance do not answer.

Which is why we need a fourth discipline.

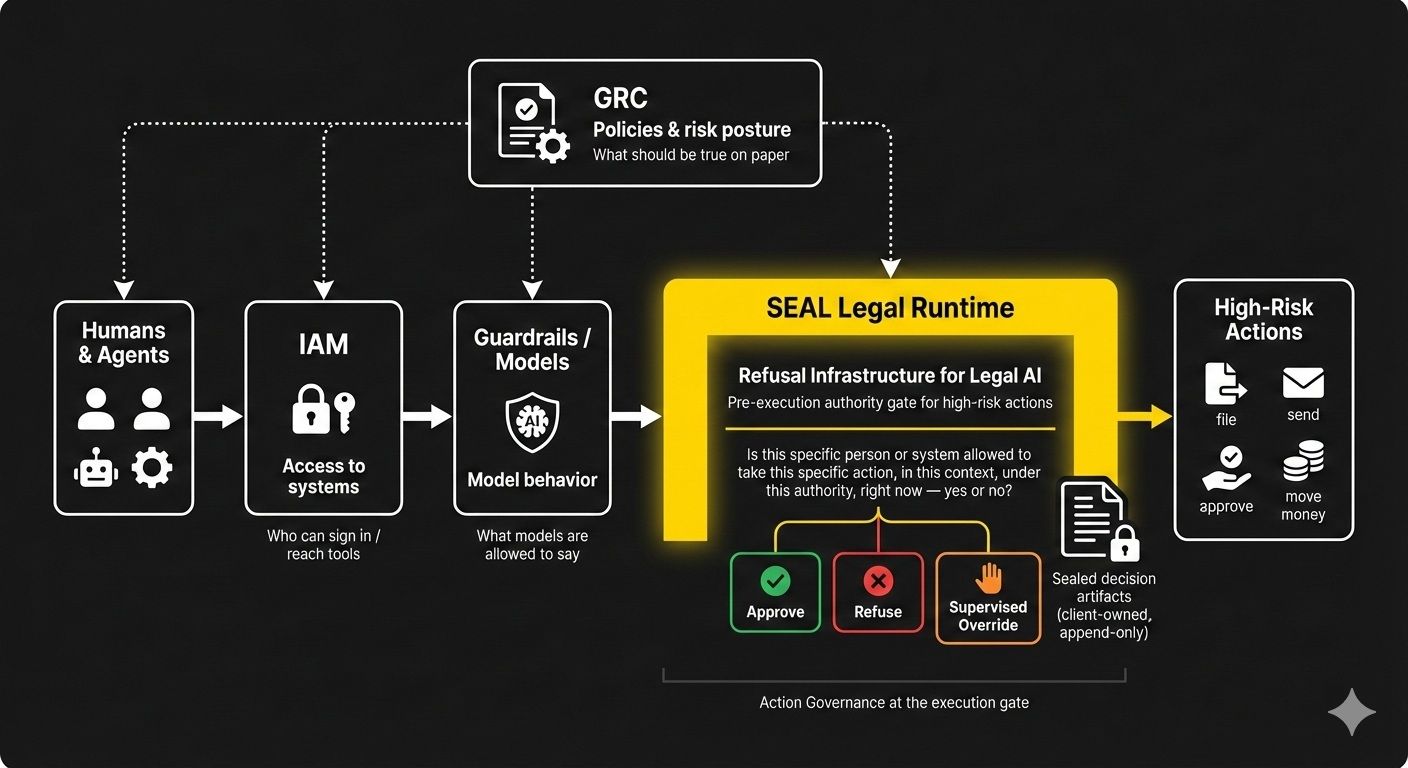

3. The Missing Layer: Action Governance

Let’s name it plainly:

Action Governance is the discipline of enforcing who may do what, on what, under what authority, before any system — human or AI — is allowed to act.

It is not:

- a dashboard

- a log sink

- an ethics policy

- an explainability report

- a model card

It is a gate.

A gate that can answer, for every attempted action:

- Who is acting? (Identity)

- In what role? (Authority)

- On what object, matter, or domain? (Scope)

- In what situation? (Context, jurisdiction, urgency)

- Under what rules? (Policy, regulation, contract, ethics)

…and then do one of three things:

- Approve and record that approval.

- Refuse and record that refusal.

- Escalate to a supervisor and record that escalation.

Before anything happens, not after.
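A minimal sketch of such a gate in Python, assuming a hypothetical `ActionRequest` shape and a small in-memory policy table keyed by (role, action); a real deployment would load its rules from the institution’s own policy store. The point is the shape: every attempt yields exactly one of three outcomes, anything without a matching rule is refused by default, and the decision is recorded before anything runs.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from enum import Enum


class Outcome(Enum):
    APPROVE = "approve"
    REFUSE = "refuse"
    ESCALATE = "escalate"


@dataclass(frozen=True)
class ActionRequest:
    actor: str   # Identity: who is acting, person or system
    role: str    # Authority: the role the actor is acting in
    action: str  # What they are trying to do
    scope: str   # Scope: the object, matter, or domain acted on
    context: dict = field(default_factory=dict)  # Context: jurisdiction, environment, urgency


# Hypothetical policy table (the "rules"): (role, action) -> rule.
# Anything not listed here is refused by default.
POLICY = {
    ("analyst", "read_report"): lambda req: Outcome.APPROVE,
    ("analyst", "send_payment"): lambda req: (
        Outcome.APPROVE if req.context.get("amount", 0) <= 1_000 else Outcome.ESCALATE
    ),
}

DECISION_LOG: list = []


def decide(req: ActionRequest) -> Outcome:
    """Evaluate a request before execution and record the decision, whatever it is."""
    rule = POLICY.get((req.role, req.action))
    outcome = rule(req) if rule is not None else Outcome.REFUSE
    DECISION_LOG.append({
        "at": datetime.now(timezone.utc).isoformat(),
        "actor": req.actor,
        "role": req.role,
        "action": req.action,
        "scope": req.scope,
        "outcome": outcome.value,
    })
    return outcome
```

In this sketch, an analyst asking to send a payment with an `amount` of 5000 is escalated rather than executed, and an action with no matching rule is refused and still logged.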

- Data governance asks: “Is this information appropriate?”

- Model governance asks: “Is this logic acceptable?”

- Security governance asks: “Is this person allowed in?”

Action governance asks a stricter question:

“Even if they are allowed in, is this action allowed to exist?”

Until that question is enforced in the runtime, every other control is five steps too late.

4. Why the Old Stack Fails Under Real Pressure

Consider three simple but common scenarios:

Example 1: Loan Decisions

- Data governance knows which fields are sensitive.

- Model governance has documented training data and fairness metrics.

- Security governance ensures only certain roles can access the interface.

Then, late on a Friday:

- A model originally approved for fraud scoring starts being used to auto-approve or deny credit.

- A front-end change quietly connects it to a decision workflow.

- Over the weekend, thousands of applications are denied.

On Monday, leaders discover:

- A disproportionate share of denials affected a protected group.

- No one remembers approving the model for credit decisions.

- No alert fired when the scope changed from “fraud signal” to “credit arbiter.”

The failure didn’t happen in data. It didn’t start in the model. It didn’t start in IAM.

It happened at the moment the system started acting in a role no one explicitly authorized.

That is an action governance failure.
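One way to close this particular gap, sketched in Python under assumed names: keep a registry of the uses each model was explicitly approved for, and have the decision workflow check it before the model’s output is allowed to act. The model IDs, use labels, and the 0.5 score threshold below are hypothetical placeholders, not real credit rules.

```python
# Hypothetical registry: model ID -> uses it was explicitly approved for.
APPROVED_USES = {
    "fraud-scorer-v3": {"fraud_signal"},
    "credit-model-v1": {"credit_decision"},
}


def authorize_model_use(model_id: str, requested_use: str) -> tuple:
    """Check, before execution, that a model is approved for the requested use."""
    approved = APPROVED_USES.get(model_id, set())
    if requested_use in approved:
        return True, f"{model_id} is approved for {requested_use}"
    return False, f"{model_id} is not approved for {requested_use}"


def decide_application(model_id: str, score: float) -> str:
    """Credit workflow step: the scope check runs before any decision is issued."""
    allowed, reason = authorize_model_use(model_id, "credit_decision")
    if not allowed:
        # The scope change is caught at the gate, not discovered on Monday.
        return f"held for human review ({reason})"
    # Placeholder threshold for illustration only.
    return "approve" if score >= 0.5 else "deny"
```

Under these assumptions, the front-end change in the example would have wired `fraud-scorer-v3` into a credit decision, and every application would have been held for review instead of denied.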

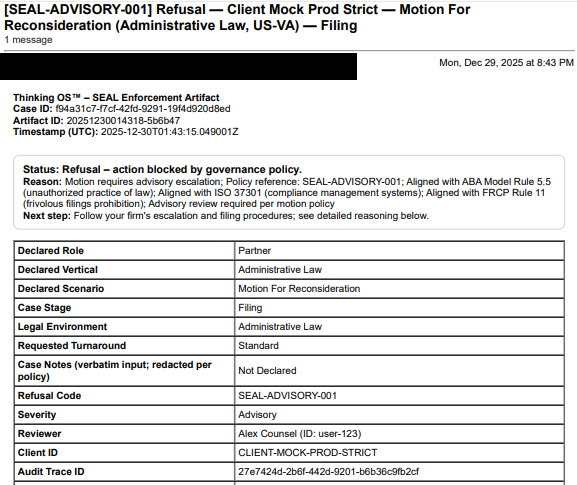

Example 2: Legal Filings

- A firm has AI tools that can draft motions, summarize case law, and prepare templates.

- Policies say “humans are always in the loop.”

- Security ensures only licensed attorneys can access certain tools.

Then:

- An associate uses AI to generate a filing for a jurisdiction they’re not licensed in.

- A partner, under time pressure, approves it after a quick glance.

- The filing contains fabricated cases and misstates authority.

Data governance didn’t stop it. Model governance didn’t stop it. Security governance didn’t stop it.

What was missing?

A gate that said:

“This role is not authorized to file this type of motion, in this court, under this client’s matter, without specific supervision.”

That’s action governance.
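A sketch of that gate in Python, assuming a hypothetical license table, a hypothetical matter-staffing table, and a rule that certain motion types, or any filing by someone unlicensed in the jurisdiction, may proceed only by escalation to a named supervisor who is licensed there and staffed on the matter. The names, jurisdictions, and motion types are illustrative and do not describe any court’s actual rules.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical bar admissions: attorney -> jurisdictions they are licensed in.
LICENSES = {
    "associate_lee": {"NY"},
    "partner_diaz": {"NY", "CA"},
}

# Hypothetical staffing: client matter -> attorneys assigned to it.
MATTER_TEAM = {"matter-17": {"associate_lee", "partner_diaz"}}

# Hypothetical: motion types that always require a supervising attorney.
SUPERVISED_MOTIONS = {"summary_judgment"}


@dataclass(frozen=True)
class Filing:
    filer: str
    jurisdiction: str
    motion_type: str
    matter: str
    supervisor: Optional[str] = None


def gate_filing(f: Filing) -> str:
    """Return 'approve', 'escalate', or 'refuse' before the filing is submitted."""
    team = MATTER_TEAM.get(f.matter, set())
    if f.filer not in team:
        return "refuse"  # Not staffed on this client's matter at all.
    filer_licensed = f.jurisdiction in LICENSES.get(f.filer, set())
    supervisor_ok = (
        f.supervisor in team
        and f.jurisdiction in LICENSES.get(f.supervisor, set())
    )
    if filer_licensed and f.motion_type not in SUPERVISED_MOTIONS:
        return "approve"
    if supervisor_ok:
        # Route to the named, licensed supervisor for explicit sign-off.
        return "escalate"
    return "refuse"
```

In the example above, the associate’s out-of-jurisdiction filing would be refused outright unless a licensed partner on the matter was named, in which case it would be escalated for real supervision rather than a quick glance.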

Example 3: Coding Agents and Infrastructure

- A dev assistant can read code and execute commands.

- Security ensures only engineers can use it.

- Logs capture all shell commands and commits.

Then:

- A junior engineer asks the assistant to “clean up old files.”

- The agent misinterprets the request and deletes a live data directory.

- Backups exist, but the business is offline for hours and some data is lost.

The system obeyed the request. Nothing in the stack asked:

“Is this category of destructive action permitted for this user, in this environment, at this time?”

Again, an action governance gap.
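A rough sketch of a pre-execution check for an agent’s shell commands, assuming a hypothetical role-to-environment table, a hypothetical change-window flag for the “at this time” part, and a deliberately crude classifier that treats a few command names as destructive. Matching the first token is easy to bypass (for example via `sudo` or a full path), so a real gate would need far more robust classification; this only shows where the check sits.

```python
import shlex

# Crude, illustrative set of command names treated as destructive.
DESTRUCTIVE = {"rm", "rmdir", "dropdb", "truncate", "mkfs"}

# Hypothetical: environments where each role may run destructive commands unaided.
DESTRUCTIVE_ALLOWED = {
    "senior_engineer": {"dev", "staging"},
    "junior_engineer": {"dev"},
}


def gate_command(command: str, role: str, environment: str,
                 change_window_open: bool = False) -> str:
    """Return 'approve', 'escalate', or 'refuse' before the agent runs a command."""
    try:
        tokens = shlex.split(command)
    except ValueError:
        return "refuse"  # Unparseable input is refused rather than guessed at.
    if not tokens:
        return "refuse"
    if tokens[0] not in DESTRUCTIVE:
        return "approve"
    if environment in DESTRUCTIVE_ALLOWED.get(role, set()):
        return "approve"
    if environment == "prod":
        # Hypothetical rule: destructive prod actions need a human,
        # and only during an approved change window.
        return "escalate" if change_window_open else "refuse"
    return "refuse"
```

With these assumed rules, the junior engineer’s “clean up” request that turns into `rm -rf` on a production path is refused, or at most escalated during a change window, instead of executed.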

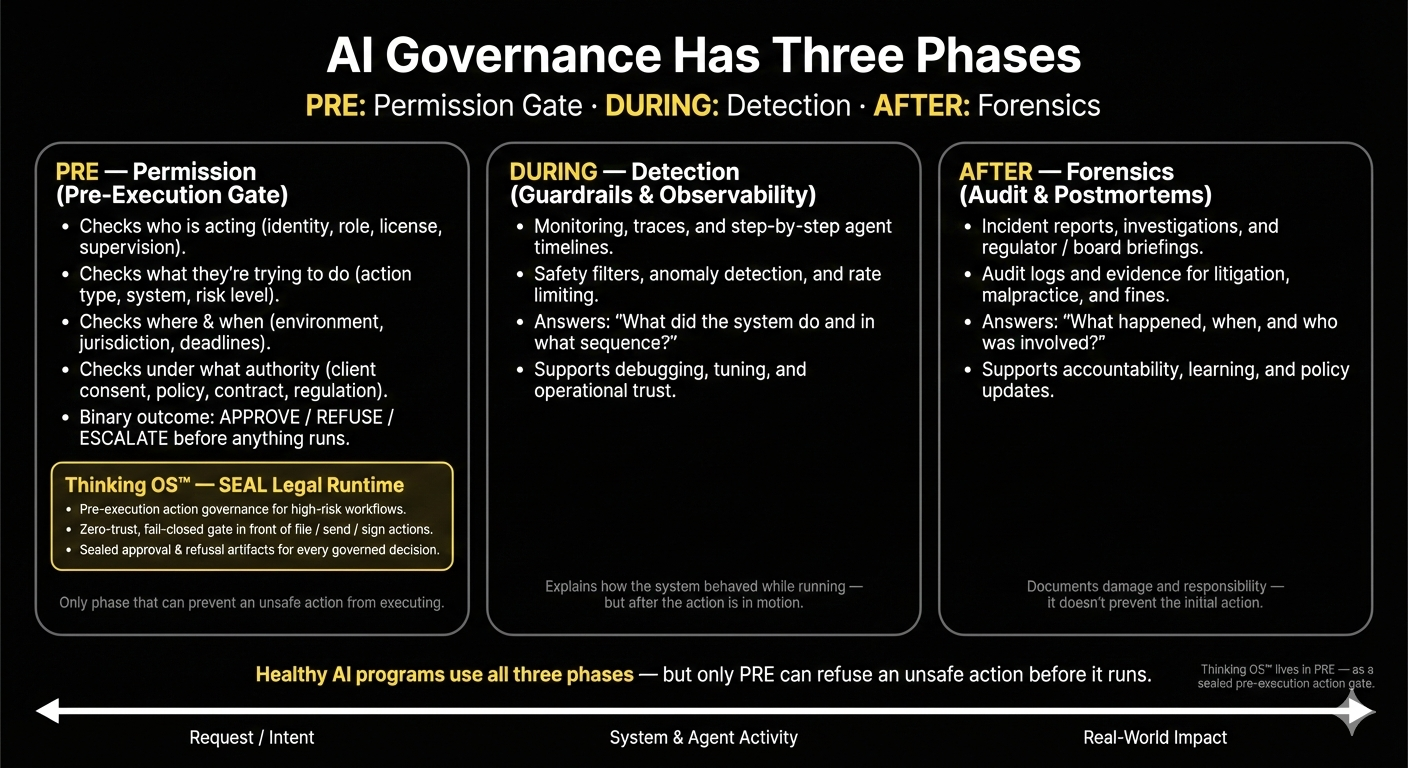

5. Why Insurers, Regulators, and Boards Now Care About This Layer

Insurers don’t need perfect models. They need provable control.

Regulators don’t need every decision to be right. They need evidence that decisions were made under an enforceable standard.

Boards don’t need to understand every model weight. They need to know:

“When this system took action in our name, can we show who allowed it, which rules applied, and what refused to run?”

Right now, most organizations can’t.

They have logs. They have policies. They may even have beautiful dashboards.

What they lack is:

- A sealed record of authority at the moment of action.

- A structured way to prove that unauthorized actions could not execute.

Without that, “AI risk” remains a floating, unpriceable abstraction.

With it, AI risk becomes a governable category.

That’s the difference between “uninsurable” and “controlled”.

6. What Action Governance Must Include (A Practical Definition)

If this discipline is going to mean anything, it has to be concrete.

An action governance layer — whether homegrown or purchased — must be able to do at least this:

Sit upstream of execution

- Before model calls, workflows, filings, messages, or transactions.

- Not as an after-the-fact audit tool.
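Sitting upstream can be as simple as making the gate the only path to the function that does the work. A minimal Python sketch, assuming a hypothetical `gated` decorator and a hypothetical `example_policy`; the wrapped function body never runs unless the policy approves, and a refusal or escalation comes back as an ordinary result rather than an exception.

```python
import functools
from typing import Any, Callable

# Hypothetical policy signature: (actor, action, context) -> "approve" | "refuse" | "escalate".
Policy = Callable[[str, str, dict], str]


def gated(action: str, policy: Policy) -> Callable:
    """Decorator that consults the policy before the wrapped action executes."""
    def decorator(fn: Callable[..., Any]) -> Callable[..., dict]:
        @functools.wraps(fn)
        def wrapper(actor: str, context: dict, *args: Any, **kwargs: Any) -> dict:
            outcome = policy(actor, action, context)
            if outcome != "approve":
                # Refused or escalated: the underlying action is never invoked.
                return {"outcome": outcome, "result": None}
            return {"outcome": outcome, "result": fn(*args, **kwargs)}
        return wrapper
    return decorator


def example_policy(actor: str, action: str, context: dict) -> str:
    # Hypothetical rules: prod always escalates; only known internal actors are approved.
    if context.get("environment") == "prod":
        return "escalate"
    return "approve" if actor.endswith("@example.com") else "refuse"


SENT: list = []


@gated("send_message", example_policy)
def send_message(text: str) -> str:
    SENT.append(text)  # The side effect we want to prevent unless approved.
    return "sent"
```

Calling `send_message("svc@example.com", {"environment": "prod"}, "hi")` in this sketch returns an escalation and leaves `SENT` untouched, which is the difference between a gate and an audit log.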

Bind actions to identities and roles

- Every attempted action is attributed to the person or system behind it.

- That identity is mapped to a role, license, or authority envelope.
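A sketch of that binding in Python, assuming a hypothetical directory that maps each identity, human or service account, to an authority envelope: the role it holds, the actions that role covers, and an optional monetary limit. An identity that cannot be resolved has no envelope and therefore no authority.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class AuthorityEnvelope:
    role: str
    actions: frozenset
    max_amount: Optional[float] = None  # None means no monetary limit applies


# Hypothetical directory: identity -> envelope. Service accounts get envelopes too.
DIRECTORY = {
    "jane.doe": AuthorityEnvelope("claims_adjuster", frozenset({"approve_claim"}), 10_000),
    "svc-chatbot": AuthorityEnvelope("support_bot", frozenset({"answer_question"})),
}


def resolve(identity: str) -> Optional[AuthorityEnvelope]:
    """Map an identity to its authority envelope, or None if it is unknown."""
    return DIRECTORY.get(identity)


def within_envelope(identity: str, action: str, amount: float = 0.0) -> bool:
    """True only if the identity resolves and the action fits inside its envelope."""
    env = resolve(identity)
    if env is None or action not in env.actions:
        return False
    return env.max_amount is None or amount <= env.max_amount
```

In this sketch the support chatbot can answer questions but has no envelope for something like `offer_refund`, which is the kind of boundary the earlier “complaint turned into a legal promise” case was missing.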

Tie scope to context

- Jurisdiction, client, environment, sensitivity, urgency.

- “Allowed on test, refused on prod” is action governance, not just DevOps.

Enforce “allowed / refused / escalated” as first-class outcomes

- Refusal is not an error. It’s a valid, designed outcome.

- Escalation routes actions to supervision when conditions aren’t met.

Generate tamper-evident decision records

- Hash-anchored, timestamped, sealed artifacts that answer:

  - Who tried to act?

  - What did they try to do?

  - What did the system decide?

  - Under which rules?
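One common way to make such records tamper-evident is a hash chain: each record embeds the SHA-256 hash of the previous one, so altering any earlier record breaks the chain from that point on. A minimal sketch using only Python’s standard library; the field names are assumptions, and a real system would also anchor the latest hash somewhere outside the operator’s control, such as a separate write-once store.

```python
import hashlib
import json
from datetime import datetime, timezone

GENESIS = "0" * 64  # Placeholder "previous hash" for the first record.


def _digest(body: dict) -> str:
    # Canonical JSON (sorted keys) so the same record always hashes the same way.
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def append_record(chain: list, actor: str, action: str, decision: str, rule: str) -> dict:
    """Append a timestamped decision record linked to the previous record's hash."""
    body = {
        "actor": actor,        # Who tried to act?
        "action": action,      # What did they try to do?
        "decision": decision,  # What did the system decide?
        "rule": rule,          # Under which rules?
        "at": datetime.now(timezone.utc).isoformat(),
        "prev": chain[-1]["hash"] if chain else GENESIS,
    }
    record = {**body, "hash": _digest(body)}
    chain.append(record)
    return record


def verify(chain: list) -> bool:
    """Recompute every hash and link; any edit to an earlier record is detected."""
    prev = GENESIS
    for record in chain:
        body = {k: v for k, v in record.items() if k != "hash"}
        if body["prev"] != prev or _digest(body) != record["hash"]:
            return False
        prev = record["hash"]
    return True
```

Quietly changing a stored `"refuse"` to `"approve"` after the fact makes `verify` return `False`, which is what turns a log into evidence.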

Belong to the institution, not the vendor

- The governance perimeter must be controlled by the enterprise.

- Models, tools, and assistants can change. The action boundary must persist.

If a “governance solution” doesn’t do these things, it’s not action governance. It’s monitoring with better branding.

7. How This Reframes AI Strategy

Once you see the missing layer, certain questions stop being optional.

Instead of:

- “What can this model do for us?”

- You start with: “What actions are we willing to let any system take under our name?”

Instead of:

- “How fast can we automate this?”

- You ask: “What must always be refused or escalated, no matter how fast we move?”

Instead of:

- “Can we explain what the model did?”

- You ask: “Can we prove this action passed through an enforceable standard of authority?”

And instead of:

- “Who owns AI?”

- You ask: “Who owns the gate?”

Because in a world of autonomous systems, the pre-execution authority gate is where governance actually lives.

8. A Simple Way to Remember the Four Layers

If you want a shorthand for the next board meeting, use this:

- Data governance: What do we know?

- Model governance: How do we reason?

- Security governance: Who can see and touch the system?

- Action governance: What are we allowed to do?

The first three are about capability. The last one is about permission.

And permission is where law, ethics, and liability actually converge.

9. Ten Years From Now

Ten years from now, action governance will sound obvious.

Every regulated enterprise will have:

- A decision perimeter that sits above its AI stack.

- Sealed records of what was allowed, refused, or escalated.

- A clear separation between “where work happens” and “where authority lives.”

Insurers will ask for it in underwriting.

Regulators will reference it in guidance.

Courts will expect it in discovery.

And someone will ask:

“When did we start talking about action governance as its own discipline?”

I’m writing this so there’s a clear answer.

It started when we were finally honest that:

The world built three layers first — data, model, security — and forgot to govern the only point that actually counts: the moment of action.

We don’t need to fear AI. We need to refuse what should never be allowed to run and record what we decide to let through.

That’s action governance. And it’s the layer we can’t afford to ignore anymore.