Not Another AI Model — Refusal Infrastructure

Thinking OS™ is refusal infrastructure for legal AI: a sealed governance layer that controls which actions are even allowed to run inside your systems.

Where most technologies compute what’s possible, Thinking OS™ enforces what must not be allowed to execute at all.

At a systems level, you can think of it as cognition infrastructure: a stable judgment layer that governs who may act, on what, under which authority before anything runs.

Think of it as a seatbelt for AI, human-driven actions, and automation: you barely notice it when things are normal, but the moment something unsafe is about to happen, it locks and prevents harm.

A seatbelt →

You hope it never triggers, but when it does, it saves you from impact.

A referee in the game →

The rules are enforced before play goes off track.

A sealed lockbox →

Once a decision record is sealed, it cannot be tampered with.

Underneath these metaphors, Thinking OS™ does one thing consistently:

it decides whether a governed action may proceed, must be refused, or should be routed for supervision — and seals that decision in an auditable artifact.

Works Across Any Executor

Thinking OS™ does this for any executor — whether the action is triggered by:

- a human (lawyer, staff, operator)

- an automated workflow

- an AI model or agent

The same governance rules apply to all three.

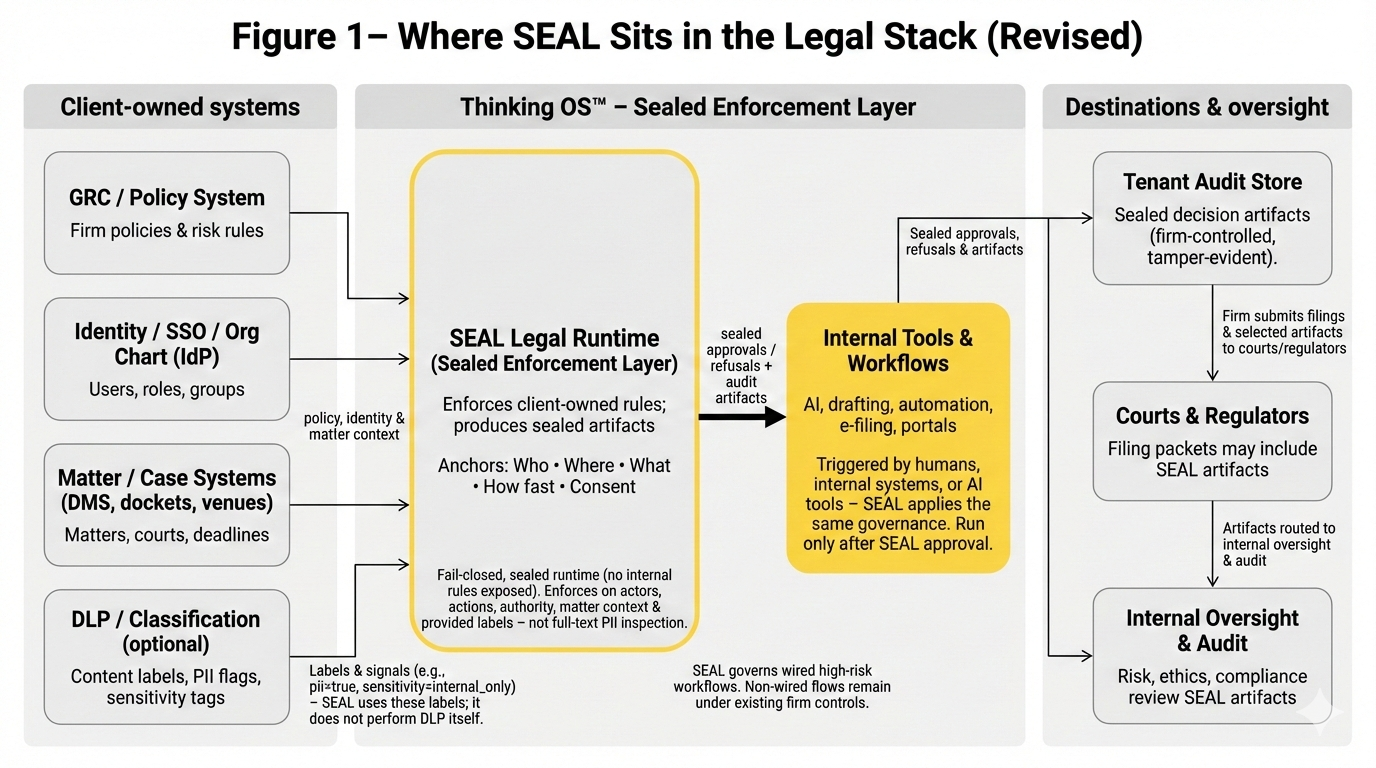

Where It Sits in Your Stack

Thinking OS™ sits in front of your tools, as a sealed enforcement layer between your own systems and any high-risk action or destination.

Client-owned systems (you)

- GRC / policy systems – your firm policies and risk rules

- Identity / SSO / org chart – your users, roles, and groups

- Matter / case systems – your courts, venues, and deadlines

Thinking OS™ – Sealed Enforcement Layer

- Runs the SEAL runtime for law today, and other runtimes over time.

- Enforces your rules at the moment of action.

- Produces sealed approval, refusal, or supervised-override artifacts for every governed request.

Internal tools & workflows (you)

- AI tools, drafting tools, automation, e-filing, portals

- These only run after Thinking OS™ has cleared the action

Destinations & oversight

- Courts and regulators – filing packets may include SEAL artifacts

- Internal oversight & audit – risk, ethics, and compliance teams review artifacts as needed

It acts as a governance gate in front of your “file / submit / act” buttons for the workflows that call it. It never replaces your systems; it gates them and produces sealed evidence of what it did. When you wire it as the only path to a given action, every request on that path is evaluated under the same checks.
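A minimal sketch of what "the only path to a given action" could look like in client code. Everything here is hypothetical: `governed`, `ActionRefused`, `demo_clear`, and `submit_filing` are illustrative names, and `demo_clear` is a local stand-in for the call your system would make to the enforcement layer, not the actual Thinking OS™ API.

```python
from functools import wraps


class ActionRefused(Exception):
    """Raised when the gate does not clear an action (hypothetical name)."""


def governed(clear):
    """Wrap an action so it runs only after `clear(intent)` returns True.

    `clear` is an assumed stand-in for the real gate call.
    """
    def decorator(action):
        @wraps(action)
        def wrapper(intent, *args, **kwargs):
            # Anything other than an explicit True is treated as a refusal.
            if clear(intent) is not True:
                raise ActionRefused(f"refused before execution: {intent.get('what')}")
            return action(intent, *args, **kwargs)
        return wrapper
    return decorator


def demo_clear(intent):
    # Hypothetical rule: only associates with consent on file may proceed.
    return intent.get("who") == "associate" and intent.get("consent") is True


filed = []  # records what actually went out the door


@governed(demo_clear)
def submit_filing(intent):
    filed.append(intent["what"])
    return "submitted"
```

Because `submit_filing` is only reachable through the wrapper, a refused request never touches the filing code at all.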

What Flows Through It

For each governed request, your systems send a small, structured payload—essentially “intent to act”.

Every decision Thinking OS™ makes is anchored to five simple questions you already govern:

- Who is acting? Partner, associate, staff, AI agent, or system account

- Where are they acting? Practice area / vertical (e.g., civil litigation, bankruptcy)

- What are they trying to do? Specific action or motion (e.g., file motion to dismiss)

- How fast is it meant to move? Standard, expedited, emergency

- Has the client or owner consented? As defined in your own policies and authorities

Those inputs come from your identity, GRC, and matter systems.

Thinking OS™ does not invent roles, policies, or consent — it enforces what you declare using these anchors.
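To make the "intent to act" payload concrete, here is a rough sketch of how the five anchors might be modeled on the client side. The field names, the `Urgency` enum, and the JSON shape are assumptions for illustration; the actual intake schema is not described here.

```python
import json
from dataclasses import dataclass, asdict
from enum import Enum


class Urgency(Enum):
    STANDARD = "standard"
    EXPEDITED = "expedited"
    EMERGENCY = "emergency"


@dataclass(frozen=True)
class IntentToAct:
    """Hypothetical payload carrying the five anchors."""
    actor_role: str    # who is acting
    vertical: str      # where (practice area)
    action: str        # what they are trying to do
    urgency: Urgency   # how fast it is meant to move
    consent: bool      # has the client or owner consented

    def to_json(self) -> str:
        data = asdict(self)
        data["urgency"] = self.urgency.value  # serialize the enum as its string value
        return json.dumps(data, sort_keys=True)


request = IntentToAct(
    actor_role="associate",
    vertical="civil_litigation",
    action="file_motion_to_dismiss",
    urgency=Urgency.STANDARD,
    consent=True,
)
payload = request.to_json()
```

Note that the payload carries only the anchors, not the matter content or any model prompt.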

What Happens at Runtime

When a tool or workflow wants to take a governed action:

1. Your system calls Thinking OS™ with who/where/what/how fast/consent.

2. Thinking OS™ checks that request against your rules and licenses:

   - identity and role

   - vertical / practice scope

   - motion / action type

   - urgency

   - consent / authority

3. If everything is in bounds, the action is approved and your tools proceed as they do today.

4. If something is off or unclear, the action is refused or routed for supervision before anything goes out the door.
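The runtime steps above can be sketched as a single evaluation function with three outcomes. This is an illustration under stated assumptions, not the SEAL runtime: the rule table `ALLOWED_ROLES`, the `SUPERVISED_URGENCY` policy, and the reason strings are all invented for the example.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Tuple


class Decision(Enum):
    APPROVED = "approved"
    REFUSED = "refused"
    SUPERVISE = "route_for_supervision"


@dataclass(frozen=True)
class Request:
    role: str
    vertical: str
    action: str
    urgency: str
    consent: bool


# Hypothetical rule table: (vertical, action) -> roles allowed to take it.
ALLOWED_ROLES = {
    ("civil_litigation", "file_motion_to_dismiss"): {"partner", "associate"},
}
KNOWN_URGENCY = {"standard", "expedited", "emergency"}
# Hypothetical policy: emergency requests always go to a supervisor.
SUPERVISED_URGENCY = {"emergency"}


def evaluate(req: Request) -> Tuple[Decision, str]:
    """Check each anchor in turn; the first failure decides the outcome."""
    allowed = ALLOWED_ROLES.get((req.vertical, req.action))
    if allowed is None:
        return Decision.REFUSED, "vertical/motion: action not in scope"
    if req.role not in allowed:
        return Decision.REFUSED, "identity: role may not take this action"
    if req.urgency not in KNOWN_URGENCY:
        return Decision.REFUSED, "urgency: unknown urgency level"
    if not req.consent:
        return Decision.REFUSED, "consent: no consent on record"
    if req.urgency in SUPERVISED_URGENCY:
        return Decision.SUPERVISE, "urgency: emergency requires supervision"
    return Decision.APPROVED, "all anchors in bounds"
```

For example, an associate filing a standard motion to dismiss with consent is approved, while the same request from a paralegal is refused on the identity check.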

Design Posture (from the SEAL runtime):

- Single governance path for wired workflows – every request that reaches SEAL goes through the same checks; overrides run through the same engine and cannot silently skip policy.

- Fail-closed by design – unknown roles, missing consent, broken evidence, or uncertainty → sealed refusal, not silent pass.

- Minimal surface area – only an intake API and sealed artifacts are exposed.
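One way to picture the fail-closed posture is a wrapper in which approval requires an explicit positive result and every other path ends in refusal. The sketch below assumes a dictionary request with hypothetical anchor keys and a hypothetical `KNOWN_ROLES` set; it illustrates the general pattern rather than the SEAL implementation.

```python
REQUIRED_ANCHORS = ("who", "where", "what", "how_fast", "consent")
KNOWN_ROLES = {"partner", "associate", "staff"}  # hypothetical role list


def fail_closed(check):
    """Turn a boolean check into a gate that refuses on any doubt."""
    def gate(request):
        try:
            # A missing anchor is uncertainty, and uncertainty means refusal.
            if any(request.get(key) is None for key in REQUIRED_ANCHORS):
                return "refused"
            # Only an explicit True approves; None or other values refuse.
            return "approved" if check(request) is True else "refused"
        except Exception:
            # A crashing check or malformed request never becomes a silent pass.
            return "refused"
    return gate


@fail_closed
def role_check(request):
    return request["who"] in KNOWN_ROLES and request["consent"] is True


good = {
    "who": "associate",
    "where": "civil_litigation",
    "what": "file_motion_to_dismiss",
    "how_fast": "standard",
    "consent": True,
}
```

The design choice worth noting is that "approved" appears on exactly one code path, so a new failure mode defaults to refusal without anyone having to anticipate it.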

What Comes Out: Sealed Artifacts

Every approval, refusal, and supervised override generates a sealed artifact, not just a log line.

Each artifact includes:

- a unique decision / trace ID

- a tamper-evident cryptographic hash

- the anchors in force (who, where, what, how fast, consent)

- a reason code family (identity, consent, license, vertical, motion policy, safety)

- a human-readable rationale
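As an illustration of the fields listed above, the following sketch builds a decision record and derives a SHA-256 hash over its canonical JSON form. The field names and the hashing scheme are assumptions, not the actual SEAL artifact format. A bare hash is only tamper-evident if the hash itself is stored or anchored somewhere an attacker cannot rewrite, so a production system would presumably sign it or record it externally.

```python
import hashlib
import hmac
import json
import uuid


def _canonical(body: dict) -> bytes:
    # Stable key order and separators so the same record always hashes the same.
    return json.dumps(body, sort_keys=True, separators=(",", ":")).encode("utf-8")


def seal(decision: str, anchors: dict, reason_code: str, rationale: str) -> dict:
    """Build a hypothetical artifact and attach a hash of its contents."""
    body = {
        "trace_id": str(uuid.uuid4()),
        "decision": decision,
        "anchors": anchors,
        "reason_code": reason_code,
        "rationale": rationale,
    }
    return {**body, "sha256": hashlib.sha256(_canonical(body)).hexdigest()}


def verify(artifact: dict) -> bool:
    """Recompute the hash and compare it with the stored one."""
    body = {k: v for k, v in artifact.items() if k != "sha256"}
    expected = hashlib.sha256(_canonical(body)).hexdigest()
    return hmac.compare_digest(expected, str(artifact.get("sha256", "")))


artifact = seal(
    decision="refused",
    anchors={"who": "paralegal", "where": "civil_litigation",
             "what": "file_motion", "how_fast": "standard", "consent": True},
    reason_code="identity",
    rationale="Role is not licensed to file in this venue.",
)
```

Changing any field after sealing, for instance flipping `decision` to `"approved"`, makes `verify` return `False`.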

Firms typically:

- Attach approval artifacts to matters

- Route refusal artifacts to the right teams for supervision

- Produce relevant artifacts in regulator, insurer, or bar packets when asked

For legal, this becomes evidence-grade governance documentation designed to withstand regulator, insurer, and court scrutiny — without exposing client matter content or model prompts.

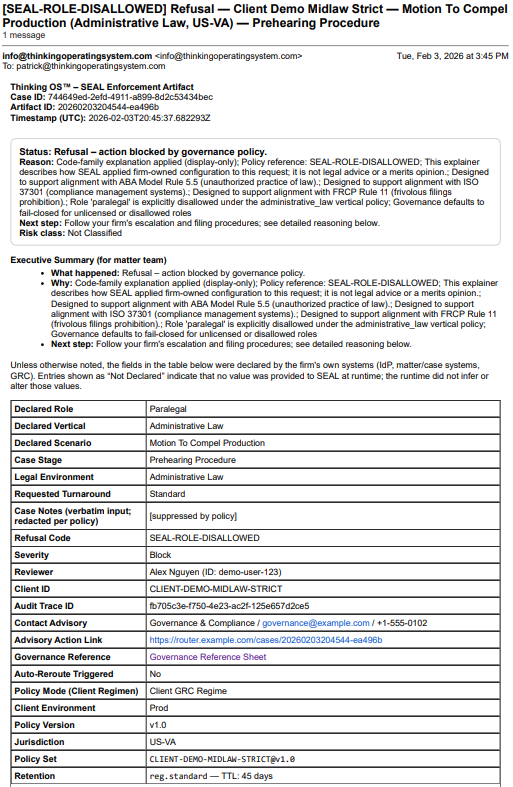

Sealed Artifact, Not a Screenshot

This is a real refusal artifact generated by Thinking OS™ when a paralegal tried to file a motion in a venue where only licensed counsel may file. The pre-execution authority gate in the SEAL Legal runtime refused the action and sealed this decision record: who acted, on what matter, what they attempted, which rules fired under which policy/authority, and why the filing was refused, all anchored by a tamper-evident hash.

It’s evidence-grade governance documentation for insurers, regulators, and GCs, without exposing any client matter content or model prompts.

Why This Architecture Exists

Most AI and workflow systems govern after the fact — through logs, dashboards, or “please review” emails once the action has already happened.

High-risk environments — legal filings, compliance systems, financial controls — can’t tolerate that.

Thinking OS™ was created to answer one upstream question:

“Is this specific person or system allowed to take this specific action, in this context, under this authority, right now — yes or no?”

Instead of detecting bad logic after it forms, Thinking OS™ disqualifies it at inception.

This discipline has a name in our briefs: Action Governance – authority over which actions systems may take, enforced before execution.

Where It Applies (Today and Next)

The architecture itself is not domain-specific. Any place where “who may do what, under what authority” matters can use it:

- Critical infrastructure

- National security systems

- Healthcare triage and diagnostics

- Financial governance

- Law and policy interpretation

- Regulated automation

In each domain, Thinking OS™ does not replace your domain logic. It sits in front of it and enforces the boundaries your leadership defines.

Today, Thinking OS™ is deployed first in law: law firms, legal departments, and legal tech vendors. We are deliberately proving it in the most demanding regulated environment before expanding into other regulated markets.

Why It Matters

Most of the AI industry is focused on making systems faster or more capable.

Thinking OS™ is focused on making them governable:

- Bounded – actions constrained by role, timeline, and context

- Traceable – sealed artifacts show when and how a decision was allowed or refused

- Refusable – unsafe reasoning is blocked, not explained away

It’s not another system inside the building.

It’s the door at the entrance, refusing what should never get in.

In legal, financial, and other regulated systems, speed without integrity isn’t progress. It’s exposure.

Thinking OS™ Is Refusal Infrastructure

- It doesn’t tell machines what to say.

- It ensures systems challenge and block what should never be executed or done at all, under configured governance rules.

Not an assistant. Not a feature.

A sealed substrate — built for moments where decisions have to stand up to courts, regulators, and time.