Evidence & Sovereignty: Who Owns “NO” and the Proof?

Decision Sovereignty, Evidence Sovereignty, and Where AI Governance Platforms Stop.

Most AI governance conversations sound like this:

- “Do we have an AI governance platform?”

- “Are we mapped to NIST / EU AI Act / ISO 42001?”

- “Do we have model guardrails and TRiSM in place?”

All important.

But in law, finance, healthcare, and other high-stakes domains, regulators and courts eventually ask a much more specific question:

“Who was allowed to let this happen, under what rules, and where is your record that proves it?”

That’s not a dashboard question.

That’s a sovereignty question.

This article is about the two pieces most “AI governance” stories still skip:

- Decision sovereignty – who owns the rules that decide what may happen at all.

- Evidence sovereignty – who owns the artifacts that prove what you allowed or refused.

Everything else – platforms, policies, frameworks – only matters to the extent it supports those two.

1. The Missing Question: Who Owns “No”?

AI governance platforms promise a lot:

- Central inventories of models and use cases

- Risk registers and control libraries

- Policy mapping to EU AI Act, NIST, ISO, etc.

- Runtime monitoring and alerts

All useful.

But none of that automatically answers the question:

“When this AI-mediated action executed in the real world – file, send, approve, move money – who had the authority to say yes or no, and where is our proof?”

If your governance story ends with:

“The platform didn’t flag it” or

“We assume the vendor blocked unsafe actions”

…then your sovereignty has quietly moved to the platform.

You’re left renting:

- someone else’s rules, and

- someone else’s logs.

That’s not governance.

That’s outsourcing judgment.

2. Decision Sovereignty – Who Owns the Rules?

Decision sovereignty is the answer to:

“Whose rules does the system actually enforce at the moment of action?”

Not whose slide deck.

Not whose policy PDF.

Whose logic actually runs when something tries to execute.

In an AI-mediated workflow, that means:

- Who defines what actions are even allowed to exist (file, send, approve, transfer, delete, prescribe, etc.)

- Who defines the conditions under which they may run:

- which roles,

- in which domains / matters / accounts / jurisdictions,

- under which client or regulatory authority,

- with what supervision or dual-control.

- Who can change those rules, and how those changes are authorized and recorded.
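
To make the list above concrete, here is a minimal sketch of what a client-owned authority rule could look like as data. The class name `AuthorityRule`, its fields, and the example roles and jurisdiction code are all hypothetical, chosen only for illustration; a real rule model would be derived from your own GRC, policy, and identity systems.

```python
from dataclasses import dataclass
from typing import FrozenSet


@dataclass(frozen=True)
class AuthorityRule:
    """One client-owned rule: who may perform an action, and under what conditions."""
    action: str                    # e.g. "file", "send", "approve", "transfer"
    allowed_roles: FrozenSet[str]  # roles that may attempt the action
    jurisdictions: FrozenSet[str]  # jurisdictions in which the rule applies
    requires_dual_control: bool    # True means a second approver must sign off
    version: int                   # incremented on every authorized change
    approved_by: str               # who authorized this version of the rule


def is_permitted(rule: AuthorityRule, action: str, role: str, jurisdiction: str) -> bool:
    """Return True only if the action, role, and jurisdiction all match the rule."""
    return (
        action == rule.action
        and role in rule.allowed_roles
        and jurisdiction in rule.jurisdictions
    )


# Hypothetical example rule for court filings.
filing_rule = AuthorityRule(
    action="file",
    allowed_roles=frozenset({"partner", "senior_associate"}),
    jurisdictions=frozenset({"US-NY"}),
    requires_dual_control=True,
    version=3,
    approved_by="general_counsel",
)
```

The point of the sketch is that the rule is plain, portable data with an explicit version and approver, so it can live in systems you control rather than only inside a vendor console.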

If a vendor-hosted AI governance platform is where:

- your org chart is modeled,

- your roles and authority envelopes live,

- your “allow / block / escalate” conditions are authored,

…then the platform effectively holds your decision sovereignty.

You still carry the liability, but the real power – the ability to say NO – sits in someone else’s product.

Quick decision sovereignty test

For any high-risk AI workflow (legal filings, payments, orders, approvals), ask:

1. Where are the authority rules actually encoded?

- In your own GRC / policy / identity systems?

- Or in a proprietary rules engine inside a vendor platform?

2. Who can change them?

- Only people under your org’s formal authority (GC, CISO, risk committee)?

- Or any admin with access to the vendor console?

3. If you unplug the platform tomorrow, what disappears?

- Just orchestration?

- Or the ability to prove who was allowed to do what?

If your ability to govern collapses when one vendor disappears, you don’t own your decisions.

You’ve outsourced decision sovereignty.

3. Evidence Sovereignty – Who Owns the Artifacts?

Even if your rules are yours, there’s a second axis:

“Who owns the artifacts that prove what you allowed, refused, or escalated?”

Governance without evidence is just good intentions.

Most AI platforms today offer:

- Logs and traces in their dashboard

- Exportable telemetry

- Maybe some “explanations” of model behavior

That’s helpful for debugging.

It’s often not enough for regulators, courts, or insurers.

What serious environments need is not just “activity logs,” but decision artifacts:

- For each high-risk attempt to act (file / send / approve / move):

- Who (human / agent / service account) tried to act

- On what (matter, account, record, venue)

- Under which role / license / authority envelope

- What the governance layer decided:

- Approved

- Refused

- Supervised override

- Under which rule / policy state that decision was made

- When it happened

And then:

- Written to client-controlled, append-only audit storage, not just a SaaS database

- Protected under the client’s own retention, access, and jurisdiction rules

That is evidence sovereignty.
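
As a rough illustration, the fields listed above could be captured in a structured record like the one below. This is a sketch under assumptions: `DecisionArtifact`, `make_artifact`, the outcome strings, and the field names are hypothetical, not a published schema.

```python
import json
from dataclasses import dataclass, asdict
from datetime import datetime, timezone
from typing import Optional

# Hypothetical outcome labels mirroring approve / refuse / supervised override.
ALLOWED_OUTCOMES = {"approved", "refused", "supervised_override"}


@dataclass(frozen=True)
class DecisionArtifact:
    actor: str           # who tried to act (human, agent, or service account)
    target: str          # on what (matter, account, record, venue)
    action: str          # file / send / approve / move
    authority: str       # role / license / authority envelope invoked
    outcome: str         # one of ALLOWED_OUTCOMES
    policy_version: str  # rule / policy state in force at decision time
    decided_at: str      # ISO 8601 timestamp in UTC


def make_artifact(actor: str, target: str, action: str, authority: str,
                  outcome: str, policy_version: str,
                  decided_at: Optional[datetime] = None) -> DecisionArtifact:
    """Build an artifact, rejecting outcomes outside the agreed vocabulary."""
    if outcome not in ALLOWED_OUTCOMES:
        raise ValueError(f"unknown outcome: {outcome!r}")
    if decided_at is None:
        decided_at = datetime.now(timezone.utc)
    return DecisionArtifact(actor, target, action, authority,
                            outcome, policy_version, decided_at.isoformat())


def to_json(artifact: DecisionArtifact) -> str:
    """Serialize with sorted keys so the same artifact always yields the same text."""
    return json.dumps(asdict(artifact), sort_keys=True)
```

Writing the serialized record to storage the client controls, under the client's retention and access rules, is the part this sketch deliberately leaves out, because it depends on your own infrastructure.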

If those artifacts live only:

- inside the vendor’s environment,

- under the vendor’s retention policy,

- queryable only through their UI or API,

…then in a crisis you’re effectively asking a third party to co-author your story of what happened.

Logs vs. artifacts

A useful way to separate the two:

- Logs = raw telemetry: prompts, responses, tool calls, system events

- Decision artifacts = structured, tamper-evident records of governance outcomes (approve / refuse / supervised) at the execution gate

You need both.

But if you have to choose who owns what:

- Vendors can host telemetry.

- You must own the artifacts that prove what your system allowed under your seal.
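
One common way to make records tamper-evident is a hash chain, where each entry's hash covers the previous entry's hash. The sketch below assumes that technique purely for illustration; the article does not say how any particular product seals its artifacts, and `ArtifactChain`, `seal`, and `GENESIS` are hypothetical names.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def seal(record: dict, prev_hash: str) -> str:
    """Hash the record together with the previous entry's hash."""
    payload = json.dumps(record, sort_keys=True) + prev_hash
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class ArtifactChain:
    """In-memory, append-only list of (record, hash) pairs."""

    def __init__(self) -> None:
        self._entries = []

    def append(self, record: dict) -> str:
        prev = self._entries[-1][1] if self._entries else GENESIS
        digest = seal(record, prev)
        self._entries.append((dict(record), digest))  # store a copy of the record
        return digest

    def verify(self) -> bool:
        """Recompute every hash; any edited or reordered entry breaks the chain."""
        prev = GENESIS
        for record, digest in self._entries:
            if seal(record, prev) != digest:
                return False
            prev = digest
        return True
```

A chain like this makes tampering detectable, not impossible. In practice it would be paired with storage and access controls that the client, not the vendor, administers.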

4. Where AI Governance Platforms Stop

AI governance platforms are not the villain here.

They are solving real problems:

- Model and use-case inventory

- Policy and regulation mapping

- Risk scoring and workflow

- Control catalogs and attestations

- Monitoring, alerts, and investigations

In the five-layer control stack, they mostly live in:

- Data / formation governance – what the system is allowed to see and learn from

- Model / agent behavior controls – what it is allowed to say and attempt

- Post-execution monitoring & reconciliation – what happened, and how we review / remediate

Where they generally do not live (today) is:

4. Pre-execution authority gate (Commit layer) – who may let an action start at all

5. In-execution constraints – how far it may go while it’s running

That’s why the marketing often sounds stronger than the architecture.

A platform can say:

“We enforce AI policies and controls at runtime.”

…but if it doesn’t:

- sit in front of file / send / approve / move, and

- return approve / refuse / supervised for each attempt, and

- emit client-owned artifacts of each decision,

…it is not solving decision sovereignty or evidence sovereignty.

It’s solving visibility and consistency, which are valuable – but they are not the same as:

- owning your rules, and

- owning your proof.

5. The Runtime Gap: Where Sovereignty Actually Lives

In real systems, sovereignty shows up at exactly one place:

The moment before an irreversible action executes.

That’s the job of a pre-execution authority gate:

- It sits between AI tools / workflows and high-risk actions.

- It receives a minimal intent-to-act payload:

- who is acting

- on what matter / account / object

- what they’re trying to do

- how urgent / exposed it is

- under which authority / consent

- It evaluates that against your rules, identity, and policy sources of truth.

- It returns approve / refuse / supervised override.

- It emits a sealed artifact of that decision into your audit store.
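
The steps above can be sketched as a small function that wraps the high-risk action. Everything here is a simplified assumption for illustration: the `Intent` fields, the `RULES` table, the outcome strings, and the rule that high-exposure requests go to supervision are hypothetical, and a real gate would read rules and identity from your own sources of truth.

```python
from dataclasses import dataclass, asdict
from typing import Callable, Dict, List, Set


@dataclass(frozen=True)
class Intent:
    """Minimal intent-to-act payload."""
    actor: str           # who is acting
    role: str            # role the actor claims
    target: str          # matter / account / object
    action: str          # what they are trying to do
    high_exposure: bool  # how urgent / exposed the action is
    authority: str       # authority / consent being relied on


# Hypothetical client-owned rule table: action -> roles allowed to attempt it.
RULES: Dict[str, Set[str]] = {
    "file": {"partner", "senior_associate"},
    "send": {"partner", "senior_associate", "associate"},
}


def decide(intent: Intent, rules: Dict[str, Set[str]]) -> str:
    """Refusal-first: anything not explicitly allowed is refused."""
    allowed_roles = rules.get(intent.action)
    if allowed_roles is None or intent.role not in allowed_roles:
        return "refused"
    if intent.high_exposure:
        return "supervised"  # needs human sign-off, handled outside this sketch
    return "approved"


def gate(intent: Intent, execute: Callable[[Intent], None],
         audit_log: List[dict], rules: Dict[str, Set[str]] = RULES) -> str:
    """Decide, record the decision, and only then (maybe) run the action."""
    outcome = decide(intent, rules)
    audit_log.append({**asdict(intent), "outcome": outcome})
    if outcome == "approved":
        execute(intent)
    return outcome
```

Note the ordering: the decision is recorded before the action runs, so a refusal leaves evidence even though nothing executed.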

Two crucial details:

1. The rules it enforces are yours.

- Derived from your GRC systems, ethics rules, risk appetite, and legal obligations.

- Not invented or opaque inside the vendor.

2. The artifacts it emits are yours.

- Written to your tenant-controlled audit store.

- Shaped for regulators, courts, and insurers – not just internal dashboards.

That’s where

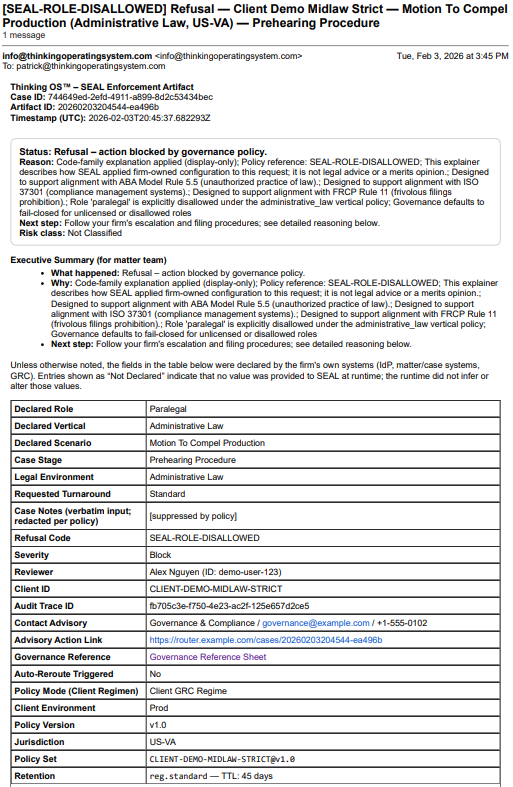

Thinking OS™ / SEAL Legal Runtime sits for law:

a sealed, refusal-first authority gate in front of file / send / approve / move in wired legal workflows, enforcing firm-owned rules and emitting firm-owned evidence.

AI governance platforms can see that gate, orchestrate around it, and report on it.

They just don’t replace it.

6. A Practical Checklist for GCs, CISOs, and Risk Leaders

If you want to turn “sovereignty” into something operational, use these questions in your next vendor or internal review.

A. Decision sovereignty – who owns the rules?

For each high-risk workflow:

1. Where are the authority rules authored and versioned?

- In our GRC / policy / identity systems?

- Or only inside the vendor’s platform?

2. Who approves changes to those rules?

- Do rule changes map to real governance processes (risk committee, GC, board mandates)?

- Is there a clear chain of attribution?

3. Can we swap platforms without losing our authority model?

- Or would we be rebuilding our decision logic from scratch?

If decision sovereignty is unclear, your system’s “NO” is not really yours.
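
One way to make the three questions above testable is to keep the rules as plain, exportable data and record each change with its approver and a fingerprint of the resulting policy state. The sketch below is an assumption about how that might look; `policy_fingerprint`, `record_change`, and the entry fields are hypothetical names.

```python
import hashlib
import json


def policy_fingerprint(rules: dict) -> str:
    """Stable SHA-256 over a canonical JSON form of the rules."""
    canonical = json.dumps(rules, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def record_change(history: list, new_rules: dict, approved_by: str, reason: str) -> dict:
    """Append a versioned, attributed snapshot of the rules to the history."""
    entry = {
        "version": len(history) + 1,
        "fingerprint": policy_fingerprint(new_rules),
        "approved_by": approved_by,
        "reason": reason,
        "rules": new_rules,
    }
    history.append(entry)
    return entry


# Hypothetical usage: rules are JSON-friendly (lists, not sets) so they stay portable.
history = []
record_change(history, {"file": ["partner"]}, "risk_committee", "initial policy")
record_change(history, {"file": ["partner", "senior_associate"]},
              "general_counsel", "extend filing authority")
```

Because the fingerprint depends only on the rule content, the same value can be stamped on each decision record to show which policy state was in force, and the history can be moved to another platform without rebuilding the decision logic.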

B. Evidence sovereignty – who owns the proof?

1. Do we get a structured, sealed record of every approve / refuse / supervised decision at the execution gate?

2. Where do those artifacts live?

- In our tenant-controlled audit store under our retention rules?

- Or buried in a vendor database?

3. If the vendor shuts down, gets acquired, or changes their roadmap, what happens to our ability to prove what we allowed or blocked?

If evidence sovereignty is unclear, you have AI activity, not AI governance.

7. How AI Governance Platforms and Pre-Execution Authority Gates Fit Together

It’s tempting to frame this as “platforms vs. runtime gates.”

It isn’t.

You need both:

- AI governance platforms to:

- inventory systems,

- map obligations,

- coordinate risk and compliance,

- provide cross-cutting visibility.

- Pre-execution authority gates to:

- enforce who may act on what, under whose authority, before execution,

- and emit sealed, client-owned artifacts.

The clean separation is:

Platforms coordinate.

Gates decide and prove.

Clients own both the rules and the proof.

If a platform tries to do everything – own your policy, your runtime authority, and your evidence – you’ve just swapped one black box (the model) for another (the governance vendor).

8. The Takeaways You Can Steal

If you want a short, referenceable version of all this, use these:

- AI governance platforms can help you see. Sovereignty is about who can say “no” – and prove it.

- Decision sovereignty = whose rules actually run at the moment of action.

- Evidence sovereignty = who owns the artifacts that prove what you allowed, refused, or escalated.

- If your stack can’t answer:

- “Where does our pre-execution authority gate ‘NO’ live?” and

- “Where do our decision artifacts live?”

you don’t have governance. You have AI hope.

- Platforms are valuable. But in regulated work, “we trusted the platform” is not a control.

The standard of care is shifting toward this simple expectation:

Your enterprise owns the rules.

Your enterprise owns the artifacts.

Vendors provide infrastructure – not judgment.

That’s the line Thinking OS™ is built around:

Refusal infrastructure, pre-execution authority gates, and sealed evidence – so “NO” and the proof of it always belong to you.