AI Governance Has Two Stacks: Data Perimeter vs. Pre-Execution Gate

Why Thinking OS™ Owns the Runtime Layer (and Not Shadow AI)

In a recent back-and-forth with a security architect, we landed on a simple frame that finally clicked for both sides:

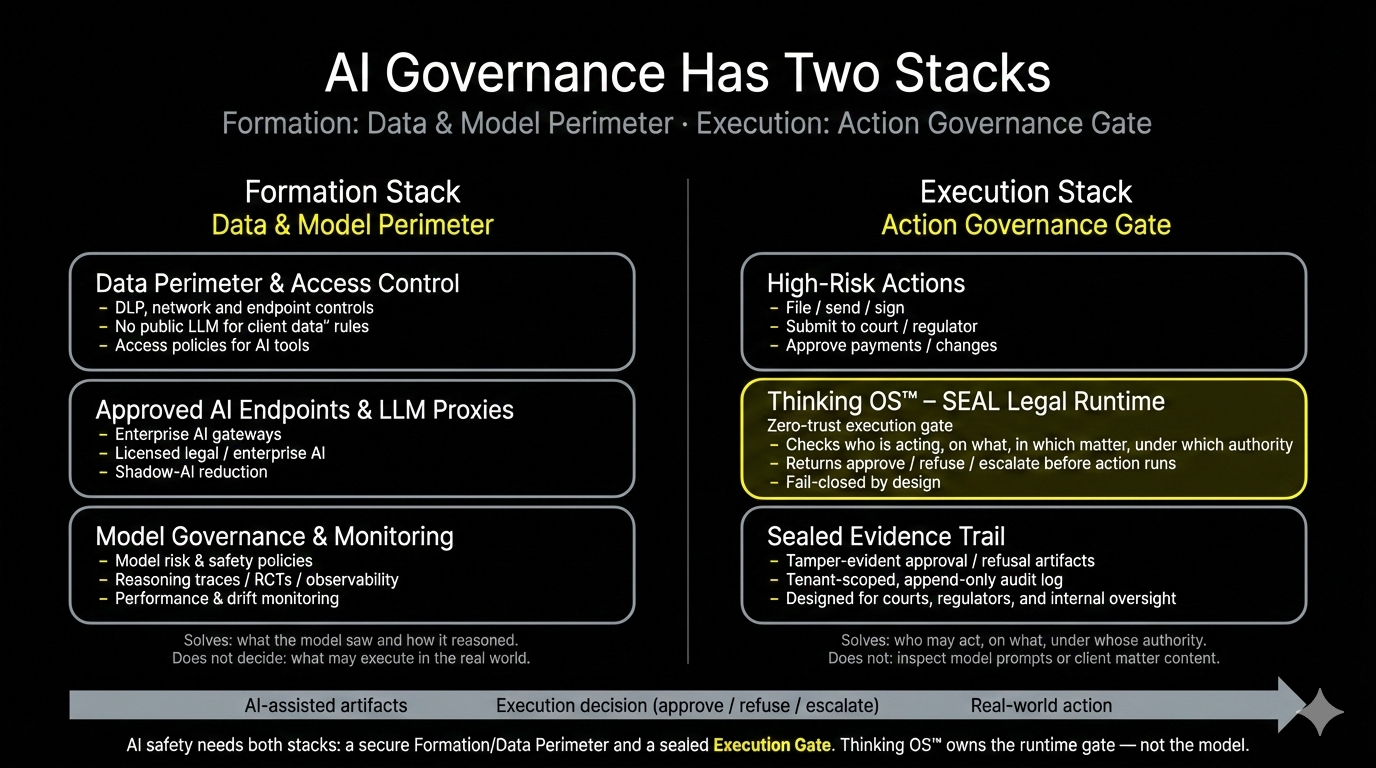

AI governance really lives in two stacks:

- the data perimeter

- the pre-execution gate.

Most organizations are trying to solve both with one control — and failing at both.

Thinking OS™ deliberately owns only one of these stacks: the pre-execution gate.

Shadow AI, DLP, and approved endpoints live in the data perimeter.

Once you separate those, a lot of confusion about “what SEAL does” disappears.

Stack 1: The Data Perimeter (Formation Stack)

This is everything that governs how reasoning or code is formed in the first place:

- DLP and data-loss controls

- Network / endpoint controls that block uploads to unsanctioned AI

- Enterprise AI proxies / approved LLM endpoints

- “No public LLM for client data” policies and training

These controls answer questions like:

- “Did an associate paste client content into a public chatbot?”

- “Did a developer push source code to an unapproved LLM?”

That’s a data perimeter problem.

It’s critical, and it is not what Thinking OS™ is designed to solve.

By design, SEAL:

- never sees prompts, model weights, or full matter documents

- does not sit in the traffic path between staff and public AI tools

- does not claim to stop data exfiltration to public models

Those risks are handled by your security stack, not by our governance runtime.

Stack 2: The Pre-Execution Gate (Runtime Stack)

The second stack is where Thinking OS™ lives.

This stack governs which actions are even allowed to execute inside your environment — regardless of how the draft or reasoning was formed.

For SEAL Legal Runtime, that means:

- It sits in front of file / submit / act for wired legal workflows.

- Your systems send a structured filing intent (who / what / where / how fast / with what authority).

- SEAL checks that intent against your IdP and GRC posture (role, matter, vertical, consent, timing).

- It returns a sealed approval, refusal, or supervision-required outcome.
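To make the shape of that exchange concrete, here is a minimal sketch of what a structured filing intent and the three possible outcomes could look like. The class names, field names, and the `missing_fields` helper are all hypothetical illustrations and are not SEAL's actual schema; the point is only that the gate receives structured metadata about the action, never the draft itself.

```python
from dataclasses import dataclass, fields
from enum import Enum
from typing import List, Optional


class Outcome(Enum):
    """The three outcomes described above (names are illustrative)."""
    APPROVED = "approved"
    REFUSED = "refused"
    SUPERVISION_REQUIRED = "supervision_required"


@dataclass(frozen=True)
class FilingIntent:
    """Hypothetical intent record: metadata about the action, no document content."""
    actor_id: Optional[str]    # who
    action: Optional[str]      # what, e.g. "file_motion"
    venue: Optional[str]       # where
    urgency: Optional[str]     # how fast
    authority: Optional[str]   # with what authority
    matter_id: Optional[str] = None


def missing_fields(intent: FilingIntent) -> List[str]:
    """Return the names of fields that are absent or blank, in declaration order."""
    return [f.name for f in fields(intent) if not getattr(intent, f.name)]
```

A caller would build a `FilingIntent` from its own workflow state and check `missing_fields` before submitting, since an incomplete intent should never be treated as approvable.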

Inside the runtime:

- There is no alternate path that can return “approved” without those checks.

- Ambiguity or missing data leads to a fail-closed refusal, not a silent pass.

- Every decision (approve / refuse / override) emits a sealed, hashed artifact into append-only audit storage under the firm’s control.
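The fail-closed behavior described above can be sketched as follows. This is an illustration under stated assumptions, not SEAL's implementation: the required field list, the `policy` mapping of authority to permitted actions, the reason strings, and the use of SHA-256 over canonical JSON are all hypothetical choices. What the sketch does show is the pattern: the decision starts as a refusal, only one code path can flip it to approved, and every path ends by producing a hashed record.

```python
import hashlib
import json
import uuid
from datetime import datetime, timezone

# Hypothetical list of fields that must be present for any approval.
REQUIRED = ("actor_id", "action", "matter_id", "authority")


def seal(intent, decision: str, reason: str) -> dict:
    """Build a decision record and attach a SHA-256 hash of its contents."""
    record = {
        "trace_id": str(uuid.uuid4()),
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "intent": intent,
        "decision": decision,
        "reason": reason,
    }
    payload = json.dumps(record, sort_keys=True, default=str).encode("utf-8")
    record["sha256"] = hashlib.sha256(payload).hexdigest()
    return record


def evaluate(intent, policy) -> dict:
    """Fail closed: the default is a refusal, and any error keeps it a refusal."""
    decision, reason = "refused", "unspecified"
    try:
        missing = [k for k in REQUIRED if not intent.get(k)]
        if missing:
            reason = "missing:" + ",".join(missing)
        elif intent["action"] not in policy.get(intent["authority"], ()):
            reason = "authority_not_permitted"
        else:
            decision, reason = "approved", "checks_passed"
    except Exception as exc:  # malformed input is refused, never passed
        decision, reason = "refused", "error:" + type(exc).__name__
    return seal(intent, decision, reason)
```

Starting from "refused" rather than branching to it means a forgotten case or an unexpected exception cannot produce an approval by accident. In a real deployment the returned record would then be written to the append-only store.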

This is action governance, not model governance:

“Is this specific person or system allowed to take this specific action, in this matter, under this authority: yes, no, or escalate?”

If the answer is “no”, the filing or action never runs under the firm’s name.

Why We Don’t Pretend to Own Formation

In our conversation, the security architect raised the hard case:

“Associate pastes privileged content into free ChatGPT.

Your execution gate never sees it. The damage happened at formation.”

He’s right about the risk — and right that this is outside SEAL’s remit.

So we draw a clean line:

- Data exfiltration to public models → handled by DLP, network policy, AI access controls, and training.

- Unlicensed logic turning into real-world legal actions → handled by SEAL as the sealed pre-execution authority gate in front of file / submit / act.

That boundary is intentional:

- We don’t claim to prevent every misuse of public AI.

- We do make sure that, inside the firm’s own stack, high-risk actions in workflows wired to SEAL are structurally impossible to execute without passing a zero-trust, fail-closed gate, and that every decision at that gate leaves evidence.

In practice, clients pair the two:

Data perimeter controls + SEAL at execution = both the data-leak surface and the action surface are governed.

What the Sealed Artifact Actually Buys You

The piece that resonated most with engineers was the audit posture:

- Every approval / refusal / override has a trace ID, hash, and rationale (anchors + code family).

- Artifacts are written to append-only, client-owned storage; SEAL never edits in place.

- Regulators and auditors test SEAL by sending scenarios and inspecting outputs, not by inspecting internal logic.
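One way to see why a hash on each artifact matters: anyone holding the record can recompute the hash and detect an edit made after the fact. The sketch below assumes, purely for illustration, that the hash is SHA-256 over the canonical JSON of every other field and is stored under a `sha256` key; the article does not specify SEAL's actual scheme, and the field names here are hypothetical.

```python
import hashlib
import json


def compute_hash(record: dict) -> str:
    """Hash every field except the stored hash itself, using canonical JSON."""
    body = {k: v for k, v in record.items() if k != "sha256"}
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    return hashlib.sha256(payload).hexdigest()


def is_intact(record: dict) -> bool:
    """True only if the stored hash matches a fresh recomputation."""
    return record.get("sha256") == compute_hash(record)


# Example: an artifact with a hypothetical trace ID and rationale.
artifact = {"trace_id": "t-001", "decision": "refused", "rationale": "missing_consent"}
artifact["sha256"] = compute_hash(artifact)

tampered = dict(artifact, decision="approved")  # edited after sealing
```

Here `is_intact(artifact)` holds while `is_intact(tampered)` does not, because the stored hash no longer matches the altered contents. Append-only storage and hashing complement each other: the first prevents silent edits, the second makes any edit detectable.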

That means:

- If a workflow is wired to SEAL, every governed action leaves evidence.

- If something high-risk happens without a SEAL artifact, that absence is itself a signal:

“This moved outside the gate. Go investigate.”

You don’t catch workarounds by hoping they never occur.

You catch them because the evidence trail has a hole.
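Finding that hole is a reconciliation problem: compare what actually executed against what the gate permitted. The sketch below assumes each executed action and each artifact carries a shared `action_id`, and that "approved" and "override" are the decisions that permit execution. Those field names and values are hypothetical; a real deployment would join on whatever identifier its systems share.

```python
from typing import List

# Hypothetical decision values that allow an action to run.
PERMITTING = {"approved", "override"}


def find_ungated(executed: List[dict], artifacts: List[dict]) -> List[dict]:
    """Return executed actions that have no permitting artifact behind them."""
    permitted_ids = {
        a["action_id"] for a in artifacts if a.get("decision") in PERMITTING
    }
    return [act for act in executed if act["action_id"] not in permitted_ids]


executed = [
    {"action_id": "A1", "kind": "file_motion"},
    {"action_id": "A2", "kind": "file_motion"},
    {"action_id": "A3", "kind": "submit_form"},
]
artifacts = [
    {"action_id": "A1", "decision": "approved"},
    {"action_id": "A2", "decision": "refused"},
]

gaps = find_ungated(executed, artifacts)
```

In this example `gaps` contains A2 (executed despite a refusal) and A3 (executed with no artifact at all). Both are exactly the "moved outside the gate" cases worth investigating.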

For CISOs, GCs, and Engineers: How to Explain This in a Meeting

If you need the 30-second version for a board, a partner meeting, or a security review, use this:

1. AI governance has two stacks.

- Data perimeter — who can use what AI, with which data.

- Execution gate — which actions are allowed to run at all.

2. Thinking OS™ (via SEAL Legal Runtime) owns the pre-execution authority gate.

It sits in front of file / submit / act, checks identity, matter, motion, consent, and timing, and then returns approve / refuse / escalate with a sealed artifact for every decision.

3. Shadow AI is handled at the data perimeter.

SEAL never touches prompts or full matter content by design; it governs what those drafts are allowed to do, not how they were written.

If you keep those three points straight, you won’t oversell what we do, and you won’t underestimate what it gives you.

Why This Matters Beyond Legal

We’re proving this first in law because it’s the hardest place to start:

strict identity, irreversible actions, overlapping rules, and audit that has to stand up in court.

But the pattern generalizes:

- Formation stack → where reasoning and code are created.

- Execution stack → where systems are allowed to act under your name.

Thinking OS™ is refusal infrastructure for that second stack: a sealed, runtime judgment layer that turns “we have policies” into “we have a pre-execution authority gate this action cannot bypass.”