Refusal Infrastructure for Legal AI

SEAL Legal Runtime from Thinking OS™ is a sealed governance layer in front of high-risk legal actions.

At runtime—before anything is filed, sent, or executed—it decides whether an action may proceed, must be refused, or requires supervision.

Why Legal Needs This

In law, the problem isn’t just what AI says — it’s what gets filed, sent, or approved under your name.

At its core, Thinking OS™ answers one question:

“Who may act, on what, under whose authority?”

This is Action Governance™ – the missing discipline in legal AI.

Thinking OS™ enforces this question before anything is filed, sent, or submitted—across humans, AI tools, and automated workflows.

Think of it as a seatbelt for legal workflows: rarely visible, impossible to ignore when an unsafe action is about to happen.

The First Principle of Legal AI Governance

Every legal workflow ultimately reduces to three governed variables:

• Who may act

• On what

• Under whose authority

Thinking OS™ operationalizes this principle at runtime as a pre-execution authority gate for high-risk actions.

We call this Action Governance™.
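As a rough illustration of how a gate over these three variables might be modeled, here is a minimal Python sketch. It is not Thinking OS™ code: the `ActionRequest` fields, the `POLICY` table, the role names, and the rule that a named supervisor routes the action to supervision are all assumptions made for the example.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    APPROVE = "approve"
    REFUSE = "refuse"
    SUPERVISE = "supervise"


@dataclass(frozen=True)
class ActionRequest:
    actor_role: str           # who may act
    action: str               # on what, e.g. "file_motion"
    authority: Optional[str]  # under whose authority (hypothetical supervisor id)


# Hypothetical policy table: action -> roles allowed to act on their own.
POLICY = {
    "file_motion": {"attorney"},
    "send_client_letter": {"attorney", "paralegal"},
}


def evaluate(request: ActionRequest) -> Decision:
    """Decide before execution; anything not explicitly allowed is refused."""
    allowed_roles = POLICY.get(request.action)
    if allowed_roles is None:
        return Decision.REFUSE      # unknown action: default deny
    if request.actor_role in allowed_roles:
        return Decision.APPROVE
    if request.authority is not None:
        return Decision.SUPERVISE   # a named supervisor must sign off
    return Decision.REFUSE
```

In this sketch, `evaluate(ActionRequest("paralegal", "file_motion", None))` returns `Decision.REFUSE`. The default-deny branch is the design choice that matters: the gate refuses whatever the policy does not explicitly cover.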

How Leaders Describe the Missing Governance Layer

Analysts, GRC engineers, and general counsel keep landing on the same point: the real risk isn’t what AI says, it’s who is allowed to let an action run at all.

“Seeing your work on Refusal Infrastructure with Thinking OS, you’ve nailed the friction point for 2026. The 5 pillars rely on a missing foundational layer: The Guardian — a distinct market category whose job is to audit the doing agents before they act.

The move is from Probabilistic Governance (hoping the model follows the prompt) to Deterministic Refusal (runtime infrastructure that blocks the action). That pre-execution gate is what lets us move from HITL cleanup to HOTL policy-setting.”

Director Analyst, Gartner

“Our current design isn’t the same as a pre-execution gate that evaluates each action in real time and produces a sealed authorization record.

That’s the difference between ‘this agent had permission’ and ‘this specific action was authorized by the right person under the right policy at the right time.’”

GRC Engineering Director · Building AI Agents for GRC Automation · SOC 2 Expert

“What you describe as a pre-execution authority gate is the practical answer to the context problem. Once agents act across tools and audiences, privacy can’t live only in the model or content classification — it has to live in a control layer that can say ‘this action is out of scope’ even if the content is correct.

That’s where privacy, authority, and accountability really meet.”

VP General Counsel · Tech, AI Security & Safety · Governance & Privacy · CIPP/E


What It Does

Think of Thinking OS™ as:

A referee

It blows the whistle when rules are broken.

A lockbox

Once sealed, nothing inside can be altered.

A gatekeeper

It checks what enters against your rules before the system ever runs.

Thinking OS™ never drafts, files, or signs anything.

It only authorizes, refuses, or routes actions—and preserves the evidence.

How it works, structurally:

- Embedded as a sealed governance layer in your stack.

- Creates sealed artifacts for every governed decision—approved, refused, or supervised override.

- Artifacts are hashed, signed, and auditable — carrying only the minimum data needed for legal, audit, and regulatory review.

- Governs at the execution boundary: before high-risk actions (file / send / approve / move) are triggered, and before errors can leave your systems.
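To make "hashed, signed, and auditable" concrete, the following Python sketch shows one generic way to build a tamper-evident record. It is an assumption-laden illustration, not the actual SEAL artifact format: the field names, the use of SHA-256 over canonical JSON, and the HMAC signature with a shared key are all hypothetical choices, and a production system might well use asymmetric signatures instead.

```python
import hashlib
import hmac
import json


def _canonical(record: dict) -> bytes:
    # Sorted keys and fixed separators give the same bytes for the same record.
    return json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")


def seal(record: dict, key: bytes) -> dict:
    """Hash the record, then sign the hash (HMAC is an assumption here)."""
    digest = hashlib.sha256(_canonical(record)).hexdigest()
    signature = hmac.new(key, digest.encode("ascii"), hashlib.sha256).hexdigest()
    return {"record": record, "sha256": digest, "signature": signature}


def verify(artifact: dict, key: bytes) -> bool:
    """Recompute hash and signature; any change to the record fails the check."""
    digest = hashlib.sha256(_canonical(artifact["record"])).hexdigest()
    expected = hmac.new(key, digest.encode("ascii"), hashlib.sha256).hexdigest()
    return (hmac.compare_digest(digest, artifact["sha256"])
            and hmac.compare_digest(expected, artifact["signature"]))


# Hypothetical minimal record: identifiers and a reason code, no matter content.
example_record = {
    "decision": "refuse",
    "actor_id": "user-123",
    "matter_id": "matter-456",
    "reason_code": "ROLE_NOT_AUTHORIZED",
}
```

Calling `seal(example_record, key)` and later `verify(artifact, key)` succeeds only if the record is byte-for-byte unchanged. Keeping the record to identifiers and reason codes mirrors the minimum-data point above.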

For Law Firms:

Every governed decision produces a sealed, tamper-evident artifact designed to support audit, regulatory, and court review.

Privilege remains protected. Oversight trails remain intact.

For Legal Tech Vendors:

Plug-in governance without re-engineering your models.

Thinking OS™ enforces approval, refusal, and supervised override upstream—so liability is contained in sealed decision artifacts for every governed decision, not scattered across logs or prompts.

Proof of Trust

Here’s what you (and your GC) can count on:

01

Immutable Decision Artifacts

→ Every governed decision—especially refused actions—generates a sealed, tamper-evident decision artifact.

02

Audit-Ready Evidence

→ Artifacts are structured for regulatory, insurer, and internal review—without exposing underlying prompts or model traces.

03

Privilege Protection

→ Only governance anchors and reason codes are recorded. Client matter content remains outside the artifact.

04

Enterprise Security Posture

→ Deployed in hardened environments with strict isolation between client context and sealed logic.

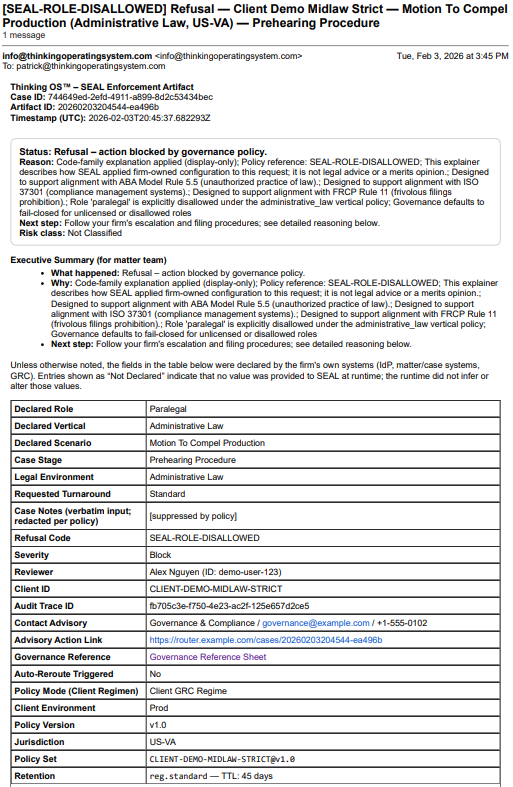

Sealed Artifact, Not a Screenshot

This is a real refusal artifact generated by Thinking OS™ when a paralegal tried to file a motion in a venue where only licensed counsel may file. The pre-execution authority gate refused the action and produced this sealed record: who acted, on what matter, under which policy/authority, and why the action was blocked.

This is what it looks like when the execution gate actually says “no” and leaves evidence.

The SEAL Legal Runtime blocked the action and sealed this decision record: who acted, what they attempted, which rules fired, and why the filing was refused, all anchored by a tamper-evident hash.

It’s evidence-grade governance documentation designed to withstand regulatory, insurer, and court scrutiny.

Pilot With Confidence — Early Access Program

- Time-boxed enforcement window in 1–3 wired workflows (Coverage Map)

- Limited early-access pricing for design partners (credited toward any future license)

- One sealed legal domain at a time (defined with the firm): e.g., Civil Litigation, Corporate & Business, IP, Immigration.

- Shared sealed decision artifacts only (approval / refusal / override) — no prompts, no model traces, no internal enforcement logic; no IP exposure

- Throughout the pilot, SEAL can only approve, refuse, or route for supervised override. It never drafts documents or files on its own.

At the end of a SEAL Pilot, you have:

- A live enforcement boundary in one legal domain

- Real approval and refusal artifacts from live workflow conditions (firm-controlled)

- A clear record of what was governed—and why

- The option to move into an ongoing license for wired enforcement + sealed evidence, with zero model or IP exposure

For Law Firms

- Protect client privilege with sealed refusal and approval artifacts tied to each governed action.

- Show regulators, insurers, and courts evidence-ready governance records when questions arise.

- Embed refusal upstream — malpractice and out-of-scope risk are stopped at the gate, not discovered after the fact.

For Legal Tech Vendors

- Plug into your stack with a simple API in front of high-risk actions.

- Contain liability without retraining or rebuilding your models.

- Keep your UX yours — Thinking OS™ stays upstream as a sealed judgment layer, not a competing product.
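For vendors wondering what "an API in front of high-risk actions" could look like in practice, here is a hedged Python sketch of the integration pattern. It does not show the real Thinking OS™ API: the `governed` decorator, the `ActionRefused` exception, and `demo_gate` are hypothetical stand-ins for whatever call the actual gate exposes.

```python
from functools import wraps
from typing import Callable


class ActionRefused(Exception):
    """Raised when the gate refuses an action before it runs."""


def governed(action: str, gate: Callable[[str, str], bool]):
    """Wrap a high-risk function so the gate is consulted before it executes."""
    def decorator(func):
        @wraps(func)
        def wrapper(actor_role: str, *args, **kwargs):
            if not gate(actor_role, action):
                # The wrapped function body is never reached on refusal.
                raise ActionRefused(f"{action} refused for role {actor_role}")
            return func(actor_role, *args, **kwargs)
        return wrapper
    return decorator


def demo_gate(actor_role: str, action: str) -> bool:
    # Stand-in for a call to an external gate; assumes only attorneys may file.
    return actor_role == "attorney"


filed = []  # records what the vendor's own code actually executed


@governed("file_motion", demo_gate)
def file_motion(actor_role: str, matter_id: str) -> str:
    filed.append(matter_id)
    return f"filed {matter_id}"
```

Here the vendor's `file_motion` keeps its own logic and interface. The only change is the wrapper, which is the sense in which the gate sits upstream rather than inside the product.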

Bottom Line

You don’t need another model. You need refusal infrastructure.

Thinking OS™ is engineered so that actions which should never run are refused under the seal. No ungoverned drift. No silent contradictions. No quiet tampering with the record.

The Judgment Layer™ (Insights)