The Three Phases of AI Governance

Why “PRE, DURING, AFTER” Is the Only Map That Makes Sense Now

Most people talk about “AI governance” like it’s a single thing.

It isn’t.

If you don’t separate when governance shows up, you will:

- buy the wrong tools,

- overestimate your safety, and

- still get blindsided when something irreversible happens fast.

The clean way to see it is simple:

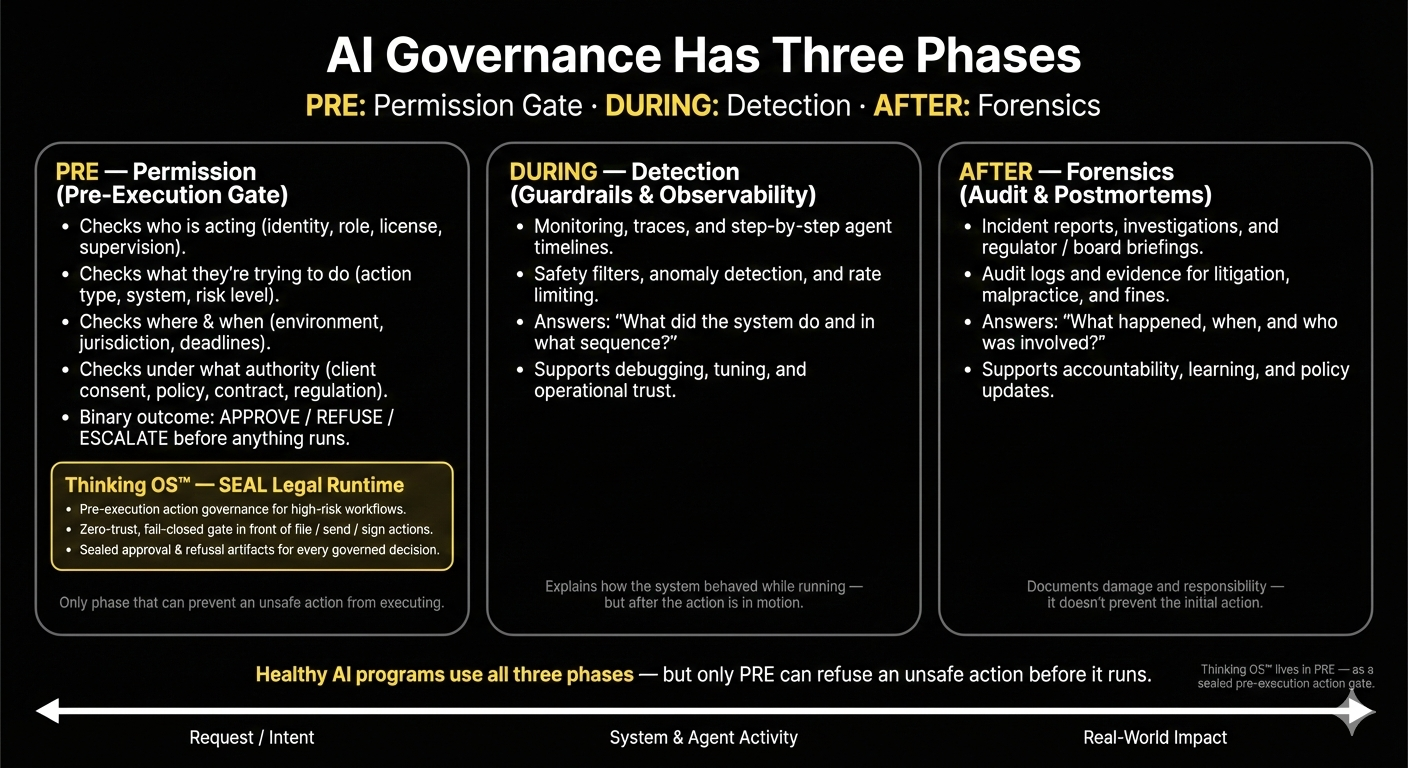

AI governance has three phases: PRE, DURING, and AFTER.

And only one of them actually prevents an unsafe action from running.

Let’s map them in plain English.

AI Governance Phase 1 — PRE: Permission (the Gate)

PRE is everything that happens before an action runs.

This is the moment that decides whether an email gets sent, a database gets touched, money moves, or a filing hits a court at all.

The only question that matters at PRE is:

“Is this person or system allowed to take this action, in this context, under this authority — yes or no?”

If the answer is no, the system must:

- refuse the action, or

- escalate it for review

…before anything executes.

That’s it. That’s PRE.

What PRE really does

A real PRE layer:

- Checks who is acting (identity, role, license, supervision).

- Checks what they’re trying to do (action type, risk level, system touched).

- Checks where and when they’re doing it (jurisdiction, environment, deadlines).

- Checks under what authority (client consent, policy, contract, regulation).

And then it makes a binary call:

- ✅ allowed to run

- ❌ refused or escalated

Not “we’ll log it.”

Not “we’ll warn them.”

A hard stop/go.
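To make the four checks concrete, here is a minimal sketch of a gate that runs them in order and returns one decision before anything executes. Everything in it is an illustrative assumption: the `ActionRequest` fields, the `POLICY` table, the roles, and the jurisdictions are hypothetical, not a reference implementation.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    ALLOW = "allow"
    REFUSE = "refuse"
    ESCALATE = "escalate"


@dataclass(frozen=True)
class ActionRequest:
    actor_role: str            # who: role of the person or system acting
    action: str                # what: the action type being attempted
    jurisdiction: str          # where: the legal or operating context
    authority: Optional[str]   # under what authority: e.g. a consent reference


# Hypothetical policy table: (role, action) -> jurisdictions where it is permitted.
POLICY = {
    ("attorney", "file_court_document"): {"NY", "CA"},
    ("paralegal", "send_client_email"): {"NY"},
}

# Hypothetical: actions that go to human review rather than a flat refusal
# when the authority reference is missing.
ESCALATE_ON_MISSING_AUTHORITY = {"file_court_document"}


def pre_gate(req: ActionRequest) -> Decision:
    """Decide before execution. Anything not explicitly permitted does not run."""
    allowed_jurisdictions = POLICY.get((req.actor_role, req.action))
    if allowed_jurisdictions is None:
        return Decision.REFUSE      # who/what check failed
    if req.jurisdiction not in allowed_jurisdictions:
        return Decision.REFUSE      # where check failed
    if not req.authority:
        if req.action in ESCALATE_ON_MISSING_AUTHORITY:
            return Decision.ESCALATE
        return Decision.REFUSE      # authority check failed
    return Decision.ALLOW
```

The design choice that matters is the default: a request that matches no policy entry is refused, so the gate never falls through to "allowed" by accident.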

Why PRE is so rare

Most stacks today have:

- authentication (you can log in), and

- authorization (you can see things, click things).

Very few have governed permission at the moment of action.

That’s why we keep seeing stories where:

- A model was originally approved for one purpose, then silently used for another.

- An agent deletes or alters data it never should have touched.

- An AI tool sends something externally that was meant to stay inside the firewall.

In each of those cases, the real failure wasn’t the model.

It was the absence of a pre-execution authority gate.

AI Governance Phase 2 — DURING: Detection (Guardrails + Observability)

DURING is everything that happens while the system is running.

This is where most “AI governance” tools live today:

- monitoring, traces, logs

- “step-by-step” agent timelines

- anomaly detection and policy checks

- rate limits and safety filters

DURING answers:

- “What did the system do?”

- “What sequence of steps did the agent take?”

- “Did anything look unusual in this run?”

This is useful. You need it.

But for irreversible actions, DURING is often too late.

If an AI agent has already:

- denied 1,000 credit applications,

- overwritten a production database, or

- sent privileged content outside the firm,

then seeing a beautiful trace of how it did that doesn’t undo the outcome.

DURING is necessary for:

- debugging

- tuning

- operational trust

…but it is not the same as governing whether the action should have been allowed.

AI Governance Phase 3 — AFTER: Forensics (Audit + Postmortems)

AFTER is everything that happens once you realize you have a problem.

This includes:

- incident reports

- internal investigations

- breach notifications

- regulator and board briefings

- litigation and malpractice defense

AFTER answers:

- “What happened?”

- “When did it happen?”

- “Who was involved?”

- “What controls did we have on paper?”

AFTER is essential for:

- accountability

- learning

- regulatory trust

But we should be honest about what it is:

AFTER is damage accounting, not prevention.

You need it. Regulators will demand it. Insurers will ask for it.

But if your governance only shows up in the AFTER phase, you’re not governing decisions — you’re documenting them.

Where Most “AI Governance” Lives Today

When you strip away the marketing language, most “AI governance” in the market is:

- DURING (monitoring, guardrails, observability), and

- AFTER (audit trails, compliance dashboards, reports).

Those are valuable.

But the failures that make headlines — and the ones that keep GCs, CISOs, and boards awake — are almost always PRE failures:

- A system was allowed to act under the wrong authority.

- An agent executed outside of its intended scope.

- A model was quietly repurposed without a fresh approval.

Everyone only notices after the blast radius.

By then, DURING and AFTER can explain and document what happened.

Neither can say:

“This action was never permitted to run under our seal.”

That claim lives in PRE.

Authority vs Audit: Where Real Governance Lives

Here’s the distinction most boards miss:

- Auditability asks: “Can we explain what happened?”

- Authority asks: “Was this action ever allowed to happen?”

You can have perfect DURING + AFTER:

- full traces,

- detailed logs,

- clean incident reports,

…and still have no answer to the real question:

“Who authorized this specific action, under what rules, and why didn’t anything stop it?”

Real governance lives where:

- authority is checked, not assumed;

- refusal and escalation are first-class options, not UX annoyances;

- the system is structurally capable of saying: “No, not under these conditions.”
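One way to picture "structurally capable of saying no" is to wrap the sensitive function itself, so there is no code path that reaches the side effect without passing the check. The sketch below is a simplified illustration. The `gated` decorator, `ActionRefused`, and the `approval_ref` rule are hypothetical names chosen for the example.

```python
from functools import wraps


class ActionRefused(Exception):
    """Raised when the gate declines an action before it runs."""


def gated(check):
    """Wrap a function so it only runs if check(context) returns True."""
    def decorator(func):
        @wraps(func)
        def wrapper(context, *args, **kwargs):
            if not check(context):
                raise ActionRefused(f"{func.__name__} refused for {context!r}")
            return func(context, *args, **kwargs)
        return wrapper
    return decorator


def has_external_send_authority(context):
    # Hypothetical rule: external sends need an explicit approval reference.
    return bool(context.get("approval_ref"))


sent = []  # stands in for a real side effect, such as an outbound email


@gated(has_external_send_authority)
def send_external(context, message):
    sent.append(message)
    return "sent"
```

Calling `send_external({"user": "agent-7"}, "privileged memo")` raises `ActionRefused` and leaves `sent` empty. The refusal happens before the body of the function runs, rather than being logged after it.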

The Simple Test for Any “AI Safety” Claim

When you evaluate any AI safety, governance, or agent framework, ask this one question:

“Can you refuse an out-of-scope action before it runs — even when the user asks nicely and the model is confident?”

If the answer isn’t a clean “yes”, they’re mostly operating in DURING and AFTER.

And that’s fine, until something irreversible happens fast.

How to Use PRE / DURING / AFTER AI Governance Inside Your Organization

You don’t have to be technical to apply this.

1. Map your current controls

For a given AI system, literally draw three columns:

- PRE (Permission)

- DURING (Detection)

- AFTER (Forensics)

Then ask:

- What do we have in PRE today that can actually refuse or escalate an action?

- What are we doing DURING runs (monitoring, alerts, traces)?

- What shows up AFTER (logs, reports, postmortems)?

Most orgs discover:

- DURING: crowded

- AFTER: decent

- PRE: almost empty

That’s your exposure.
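If you prefer a spreadsheet or a script to a whiteboard, the same three-column exercise can be sketched in a few lines. The inventory below is invented for illustration. The key column is the last one, which records whether a control can actually refuse or escalate an action before it runs.

```python
from collections import defaultdict

# Hypothetical inventory: (control name, phase, can it block before execution?)
controls = [
    ("SSO login", "PRE", False),
    ("Agent trace viewer", "DURING", False),
    ("Rate limiter", "DURING", False),
    ("Anomaly alerts", "DURING", False),
    ("Audit log export", "AFTER", False),
    ("Incident postmortem template", "AFTER", False),
]


def map_controls(inventory):
    """Group control names by phase and list the PRE controls that can refuse."""
    columns = defaultdict(list)
    for name, phase, _ in inventory:
        columns[phase].append(name)
    blocking_pre = [name for name, phase, can_block in inventory
                    if phase == "PRE" and can_block]
    return dict(columns), blocking_pre


columns, blocking_pre = map_controls(controls)
for phase in ("PRE", "DURING", "AFTER"):
    print(f"{phase}: {len(columns.get(phase, []))} control(s)")
print(f"PRE controls that can refuse or escalate: {len(blocking_pre)}")
```

In this made-up inventory, the only PRE entry is a login control that cannot refuse a specific action, so the last count comes out at zero.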

2. Clarify who owns PRE

Someone needs to own the answer to:

“Who is allowed to do what, where, and under whose authority — and what must never run at all?”

Depending on the context, that might be:

- GC / legal

- risk / compliance

- security / CISO

- business owner for the domain

But PRE cannot be “owned by the model” or “left to the vendor.”

It must be owned by the institution.

3. Demand hard gates, not just better dashboards

When a vendor says “we do AI governance,” ask them:

- “Show me the PRE layer in your solution. Where is the gate?”

- “What exactly happens when an out-of-policy action is attempted?”

- “Do you log it, warn about it, or actually refuse it?”

If everything they show you lives in DURING and AFTER, you know what you’re buying:

- great visibility,

- better paperwork,

- no real brake.

Where Thinking OS™ Sits

Thinking OS™ exists because PRE is missing almost everywhere.

We don’t tune models, train agents, or design prompts.

We focus on one thing:

A pre-execution authority gate.

An upstream gate that helps ensure certain actions don’t run at all unless conditions are met.

- If the identity, scope, consent, or authority checks fail → refuse or escalate, with a sealed record.

- If the checks pass → let existing tools run, with a sealed record of why.

Not “better prompts.”

Not “more dashboards.”

Not “we’ll audit it later.”

A boundary.
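The article does not say how a record is "sealed," so the following is only one plausible reading: a tamper-evident record, where any later edit to the decision is detectable. This generic sketch uses an HMAC over the record contents. It is not a description of how Thinking OS™ works, and `SEAL_KEY`, `seal`, and `verify` are hypothetical names.

```python
import hashlib
import hmac
import json

# Hypothetical signing key; a real deployment would keep this in a secrets manager.
SEAL_KEY = b"demo-key-not-for-production"


def seal(record: dict) -> dict:
    """Return a copy of the record with an HMAC-SHA256 seal over its contents."""
    payload = json.dumps(record, sort_keys=True).encode("utf-8")
    digest = hmac.new(SEAL_KEY, payload, hashlib.sha256).hexdigest()
    return {**record, "seal": digest}


def verify(sealed: dict) -> bool:
    """Recompute the seal and compare; any edit to the record breaks the match."""
    body = {k: v for k, v in sealed.items() if k != "seal"}
    payload = json.dumps(body, sort_keys=True).encode("utf-8")
    expected = hmac.new(SEAL_KEY, payload, hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, sealed.get("seal", ""))


refusal = seal({"action": "send_external", "decision": "refuse",
                "reason": "no client consent on file"})
```

Under this assumption, `verify(refusal)` holds for the original record and fails if someone later changes `"decision"` to `"allow"`, which is the property that lets a refusal serve as evidence.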

The New Baseline for Trust

In the next wave of AI adoption, the question won’t be:

- “Do you have AI?”

- “Do you have a governance dashboard?”

It will be:

“Show me where your system can prove that certain actions were never allowed to run under your seal.”

PRE, DURING, and AFTER all matter.

- DURING supports debugging, tuning, and operational trust.

- AFTER supports accountability.

- PRE is where real governance lives.

That’s the piece most organizations are missing.

And that’s the piece you just put your name on.