The Missing Layer in the Agentic AI Revolution

Why Every New AI Standard Still Leaves Enterprises Exposed

Over the past week, the world’s largest AI companies announced the first “constitution” for agentic AI: a shared set of protocols designed to make autonomous systems interoperable, predictable, and safe.

This is an important milestone.

Open standards for:

- tool access,

- context sharing,

- project-aware instructions,

- and multi-agent scaffolding

…are necessary for the ecosystem to function.

But even as the stack becomes more coordinated, something deeper is still missing.

Not from any one company.

Not from any one standard.

But from the entire conversation.

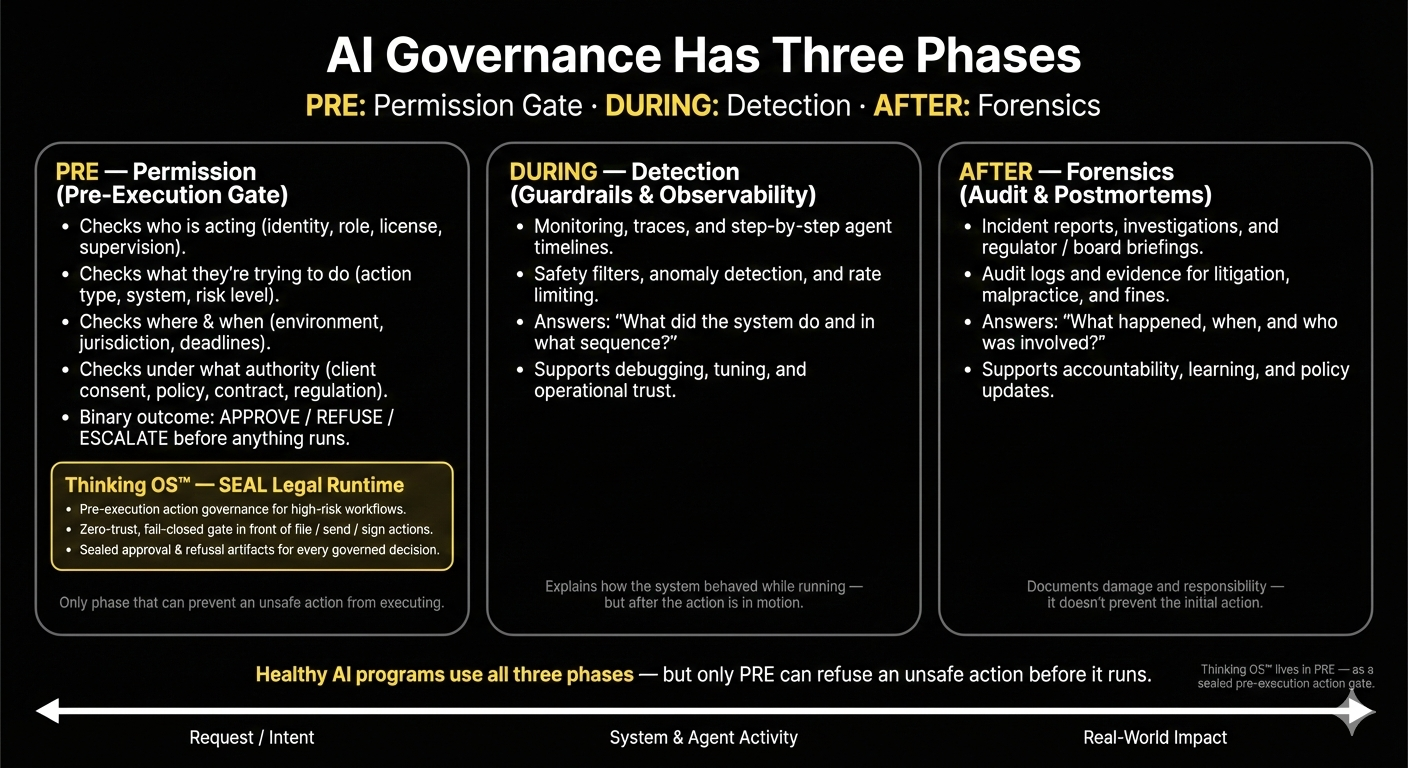

1. AI Infrastructure Is Solving Capability. Enterprise Risk Lives in Authority.

Most of the agentic ecosystem is focused on what agents can do:

- how they plan,

- how they collaborate,

- how they call tools,

- how they read codebases,

- how they exchange context.

These are technical questions.

But enterprise liability doesn’t begin with capability.

It begins with permission.

Every consequential event in an organization — a filing, a notice, a transfer, a message, an approval — rests on a single upstream question:

Who is allowed to do this, under what authority, in this context, at this moment?

No agent standard answers that question yet.

And until it does, enterprises will continue to absorb risk that cannot be priced, explained, or defended.

2. Standards Coordinate Behavior. They Do Not Govern Action.

Interoperability solves fragmentation.

It does not solve accountability.

Even with perfect standards, an enterprise still lacks:

- a boundary where identity is validated,

- a check on role-based authority,

- a verification of context and consent,

- a refusal mechanism when something is wrong,

- and a sealed record of the decision itself.

These are not workflow conveniences.

They are governance necessities.

Without this layer, any organization deploying autonomous agents inherits the same exposure:

A system can act faster than oversight can understand it.

This is the structural gap insurers are signaling.

It is the reason regulators are accelerating.

It is the friction boards are beginning to name.

3. The First Crisis of Agentic AI Will Not Be Technical. It Will Be Forensic.

In most major AI incidents to date, the failure was not:

- the model,

- the protocol,

- or the orchestration framework.

The failure was the aftermath.

Most organizations cannot reconstruct:

- who initiated an action,

- whether they were authorized,

- what governance should have prevented it,

- or why the system moved at all.

When evidence is missing, accountability collapses.

And when accountability collapses, risk becomes uninsurable.

This is the gap no protocol — MCP, AGENTS.md, Goose, or anything that follows — is designed to close.

Because it sits above the infrastructure and before the agent.

4. The Next Layer the Industry Will Need Is Not More Intelligence. It Is a Judgment Perimeter.

As agentic systems mature, enterprises will require a constitutional layer — not for the agents, but for themselves.

A boundary (pre-execution authority gate) that:

- checks identity,

- checks role,

- checks authority,

- checks context,

- refuses when conditions fail,

- and produces a tamper-evident artifact for every attempted action.
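To make the idea of a pre-execution authority gate more concrete, here is a minimal Python sketch. Everything in it is hypothetical: the `Request`, `AuthorityGate`, and `verify_chain` names, the in-memory policy tables, and the use of a recorded consent flag as the stand-in for "context" are illustrative assumptions, not part of MCP, AGENTS.md, Goose, or any other existing standard or product. It shows the shape of the idea: every attempted action passes identity, role, authority, and context checks before it runs, any failed check produces a refusal instead of an action, and every decision, allowed or refused, is appended to a SHA-256 hash chain so that later edits to the record become detectable.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first record


@dataclass(frozen=True)
class Request:
    """A hypothetical description of an action an agent wants to take."""
    actor: str              # the agent (or person) initiating the action
    on_behalf_of: str       # the principal whose authority is being exercised
    action: str             # e.g. "transfer", "send_notice"
    amount: float = 0.0
    consent: bool = False   # assumed stand-in for "context and consent verified"


@dataclass
class AuthorityGate:
    identities: dict[str, str]                # actor -> principal it may act for
    roles: dict[str, set[str]]                # principal -> roles held
    permissions: dict[str, dict[str, float]]  # role -> {action: max amount}
    log: list[dict] = field(default_factory=list)

    def _seal(self, entry: dict) -> dict:
        # Chain each record to the previous one: altering any earlier
        # record changes its hash and breaks every link after it.
        prev = self.log[-1]["hash"] if self.log else GENESIS
        body = {**entry, "prev": prev}
        digest = hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()
        sealed = {**body, "hash": digest}
        self.log.append(sealed)
        return sealed

    def evaluate(self, req: Request) -> dict:
        """Run every check before execution; record the decision either way."""
        failed = []
        if self.identities.get(req.actor) != req.on_behalf_of:
            failed.append("identity")
        limits = [
            self.permissions.get(role, {}).get(req.action)
            for role in self.roles.get(req.on_behalf_of, set())
        ]
        limits = [limit for limit in limits if limit is not None]
        if not limits:
            failed.append("role")          # no role permits this action at all
        elif req.amount > max(limits):
            failed.append("authority")     # permitted, but beyond delegated limit
        if not req.consent:
            failed.append("context")
        return self._seal({
            "time": datetime.now(timezone.utc).isoformat(),
            "actor": req.actor,
            "on_behalf_of": req.on_behalf_of,
            "action": req.action,
            "amount": req.amount,
            "decision": "refuse" if failed else "allow",
            "failed_checks": failed,
        })


def verify_chain(log: list[dict]) -> bool:
    """Recompute every hash; False means some record was altered or removed."""
    prev = GENESIS
    for record in log:
        body = {k: v for k, v in record.items() if k != "hash"}
        digest = hashlib.sha256(json.dumps(body, sort_keys=True).encode()).hexdigest()
        if record["prev"] != prev or record["hash"] != digest:
            return False
        prev = record["hash"]
    return True


if __name__ == "__main__":
    gate = AuthorityGate(
        identities={"agent-7": "alice"},
        roles={"alice": {"treasury_analyst"}},
        permissions={"treasury_analyst": {"transfer": 10_000.0}},
    )
    ok = gate.evaluate(Request("agent-7", "alice", "transfer", 5_000.0, consent=True))
    blocked = gate.evaluate(Request("agent-7", "alice", "transfer", 50_000.0, consent=True))
    print(ok["decision"], blocked["decision"], blocked["failed_checks"])  # allow refuse ['authority']
    print(verify_chain(gate.log))  # True
    gate.log[0]["amount"] = 1.0    # simulate after-the-fact tampering
    print(verify_chain(gate.log))  # False
```

A hash chain makes the record tamper-evident, not tamper-proof: anyone able to rewrite the entire log could recompute every hash. A production design would likely also sign each entry or anchor the latest hash somewhere the gate's operator cannot alter.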

A system does not become safer because it is smarter.

It becomes safer because its actions are governed before they occur and provable after they do.

This is the layer missing from every existing standard.

Not because the leaders in this space lack vision.

But because responsibility for enterprise decisions does not live with them.

5. The Agentic Future Needs Two Constitutions.

The AI industry is now building the first:

A constitution for how agents behave.

But enterprises need the second:

A constitution for how authority is validated before action.

Without both, organizations will continue to experience:

- mismatches between the speed of the system and the reflexes of human oversight,

- unexplainable decisions,

- uninsurable exposures,

- and governance gaps that appear only after the damage is done.

The evolution of agentic AI is inevitable.

The evolution of enterprise governance must be too.