When the State of Virginia Becomes Agentic: Why the Pre-Execution Gate Has to Come Before the Model

Virginia just crossed a threshold most people don’t have language for yet.

On July 9th, Governor Glenn Youngkin issued Executive Order 51. The order puts AI agents to work inside the state’s regulatory process: not as a chatbot on a website, but as part of how laws and rules are reviewed.

This isn’t just “using AI.”

It’s bringing AI into the machinery that shapes what people and institutions are allowed to do.

What Just Happened

Virginia has authorized AI agents to assist with reviewing its regulatory environment. These agents can:

• Scan statutes, regulations, and guidance documents.

• Flag contradictions, redundancies, and streamlining opportunities.

• Operate across agencies, surfacing drift across large bodies of text.

In other words, AI is now sitting much closer to regulatory judgment. It’s not just drafting memos; it’s shaping what shows up on a policymaker’s desk.

Why This Moment Matters

This isn’t just automation.

It’s the beginning of agentic infrastructure at the policy layer: systems that don’t just answer questions, but help decide what moves forward.

Once AI touches that surface, “AI governance” stops being a slide deck problem and becomes an architecture problem.

The Real Risk Isn’t “AI Error.” It’s What Gets to Execute.

The practical question for states isn’t “Should we use AI?”

It’s:

“Once AI is embedded in our workflows, what can it actually cause to happen?”

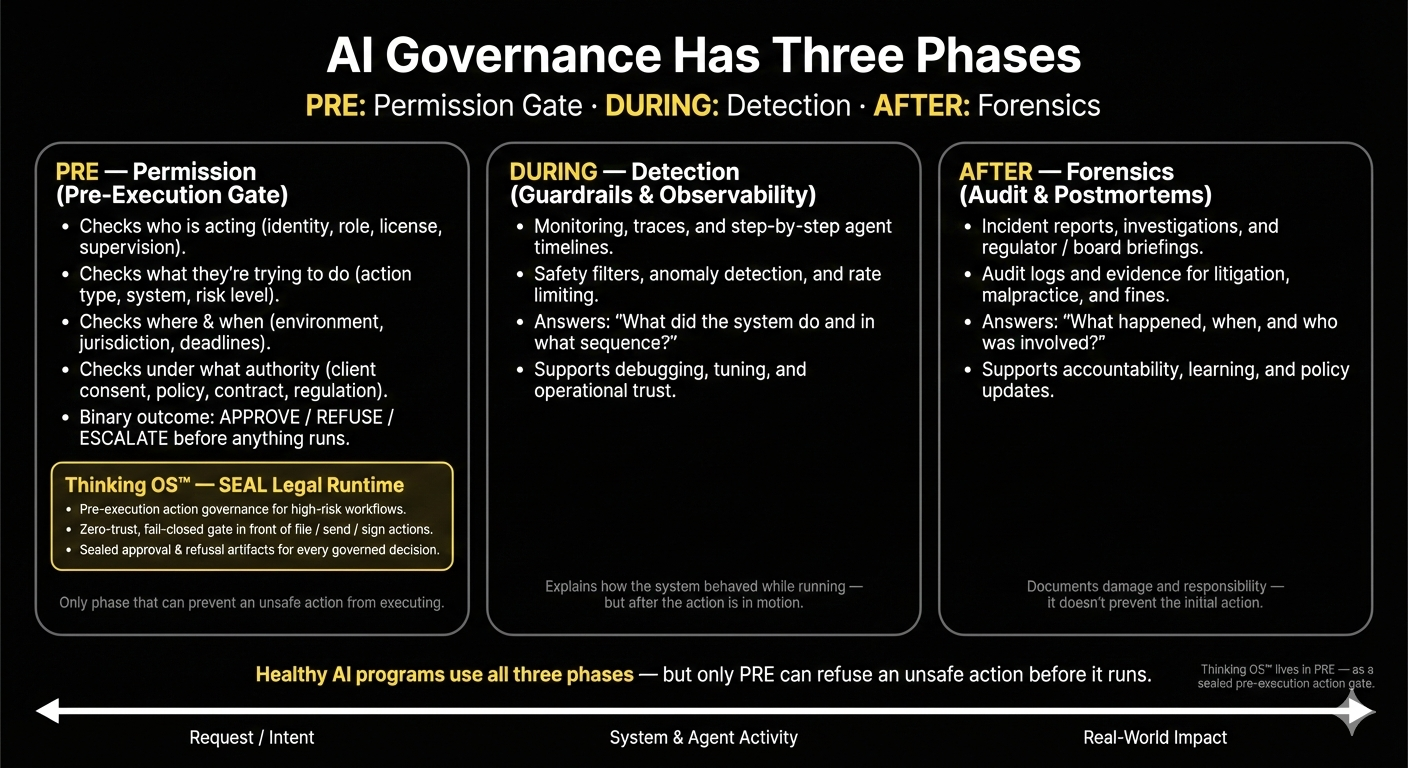

Most controls today live during and after execution:

– monitoring, logs, and traces,

– post-hoc reviews and investigations.

What’s missing in many stacks is a pre-execution gate for high-risk actions—a place in the architecture that can say:

“This action cannot run under this identity, in this context, under this authority.”

Until refusal is enforceable before an action executes, governance is mostly watching and documenting, not governing.
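To make the idea concrete, here is a minimal sketch of a pre-execution gate in Python. It is an illustration, not a description of any state's or vendor's system: the names `ActionContext`, `ALLOWED`, `pre_execution_gate`, and `flag_section` are all hypothetical, and a real gate would consult a policy service rather than an in-memory set. The point it shows is ordering: the check happens before the action body runs, so a refused action leaves no side effect to clean up.

```python
from dataclasses import dataclass
from functools import wraps


class ActionRefused(Exception):
    """Raised when the gate refuses an action before it runs."""


@dataclass(frozen=True)
class ActionContext:
    identity: str   # who (or which agent) is acting
    context: str    # the workflow the action belongs to
    authority: str  # the authority the actor claims to act under


# Hypothetical allowlist of (identity, context, authority) triples.
ALLOWED = {
    ("reg-review-agent", "regulatory-review", "flag-only"),
}


def pre_execution_gate(func):
    """Wrap an action so it runs only if its context is on the allowlist."""
    @wraps(func)
    def wrapper(ctx: ActionContext, *args, **kwargs):
        if (ctx.identity, ctx.context, ctx.authority) not in ALLOWED:
            # Refusal happens here, before func is ever called.
            raise ActionRefused(f"{func.__name__} refused for {ctx.identity}")
        return func(ctx, *args, **kwargs)
    return wrapper


flagged = []  # stands in for a real side effect


@pre_execution_gate
def flag_section(ctx: ActionContext, section: str) -> str:
    flagged.append(section)
    return "flagged"
```

Calling `flag_section` with a context outside the allowlist raises `ActionRefused` and leaves `flagged` untouched, which is the difference between refusing an action and logging it after the fact.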

What Virginia Has Done Right

✅ Naming AI explicitly at the policy layer.

✅ Treating agents as part of regulatory review, not just public UX.

✅ Framing this as transformation, not a one-off tool.

The open questions for any state taking this step are:

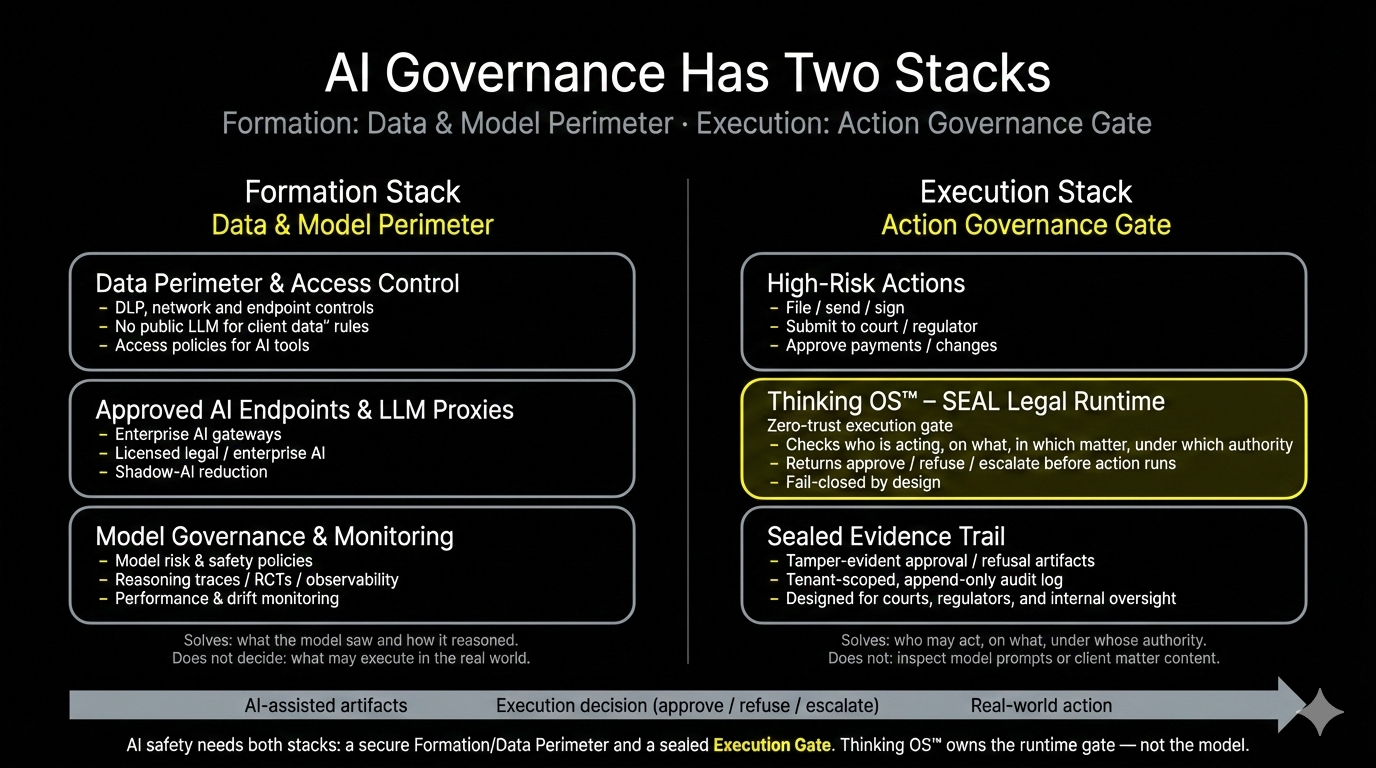

– Where is the data and model perimeter that controls which AI tools can see which records?

– Where is the action governance gate that decides which AI-touched actions are allowed to file, send, publish, or approve anything under the state’s name?

Both stacks are needed. Most early deployments are still light on the second.
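The first of those two stacks, the data perimeter, can be pictured as a filter on what each tool is allowed to read. The sketch below is an assumption-laden illustration: the `PERIMETER` table, the tool names, and the record `kind` field are invented for the example and do not describe Virginia's deployment.

```python
# Hypothetical perimeter: which record classes each AI tool may read.
PERIMETER = {
    "reg-review-agent": {"statute", "regulation", "guidance"},
    "public-chatbot": {"guidance"},
}


def visible_records(tool: str, records: list[dict]) -> list[dict]:
    """Return only the records whose class the tool is cleared to see."""
    allowed = PERIMETER.get(tool, set())  # unknown tools see nothing
    return [r for r in records if r["kind"] in allowed]
```

Note what this does not cover: it limits what a model can see, and says nothing about what an agent may then file, send, or publish. That is the job of the second stack.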

Where Thinking OS™ Fits in This Picture

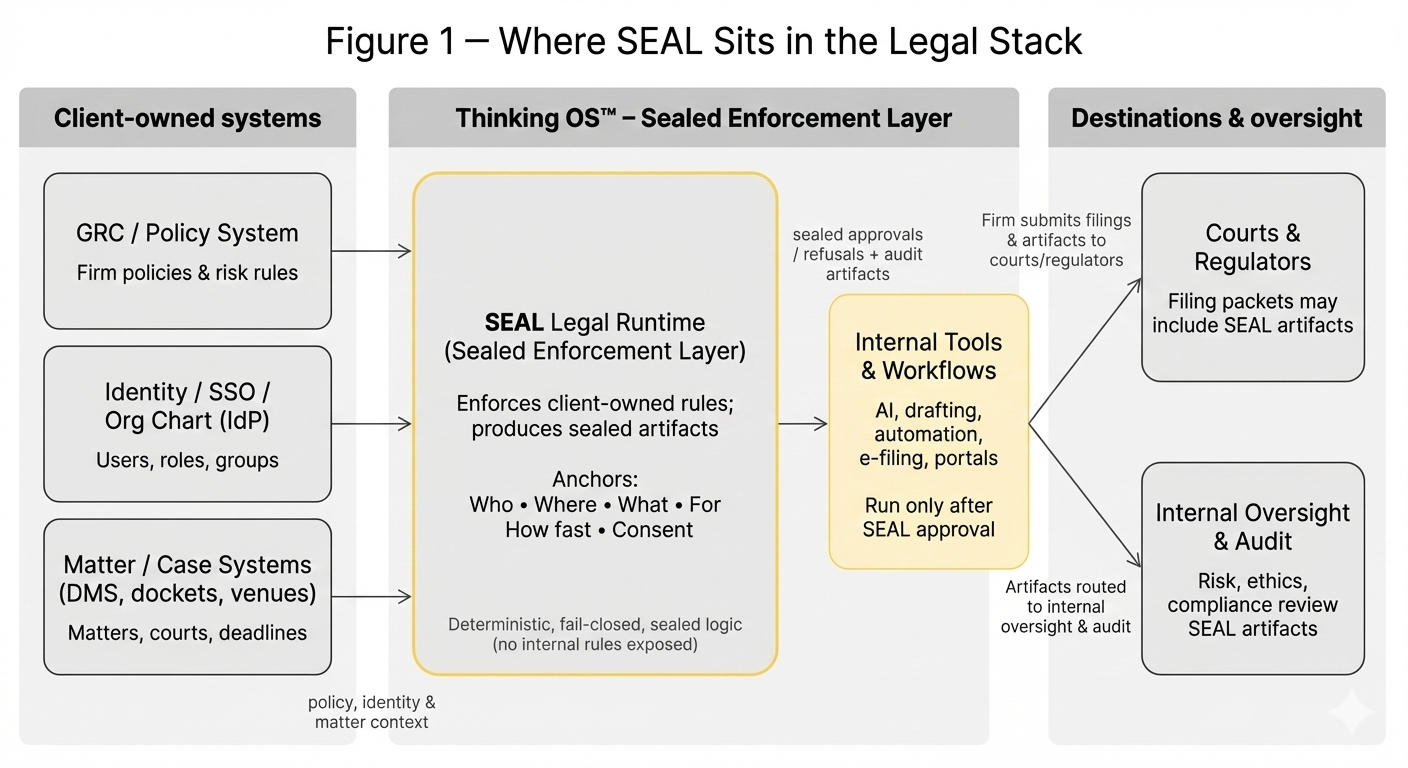

We don’t run Virginia’s stack, and SEAL Legal Runtime is focused on law, not state-wide policy engines. But the architectural pattern is the same.

Thinking OS™ is refusal infrastructure for legal AI:

– a sealed governance layer that sits in front of high-risk legal actions (file / send / sign / submit),

– checks who is acting, on what matter, under which authority,

– then returns approve / refuse / escalate before anything is filed, sent, or approved.
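As a rough sketch of that three-way outcome (and only a sketch: this is not Thinking OS™ code, and the `AUTHORITY` table, `ESCALATABLE` set, and actor names are hypothetical), a decision function might take who is acting, on what matter, and which action, and return one of three values:

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    REFUSE = "refuse"
    ESCALATE = "escalate"


@dataclass(frozen=True)
class Request:
    actor: str
    matter: str
    action: str  # one of: file, send, sign, submit


# Hypothetical authority table: (actor, matter) -> actions allowed unsupervised.
AUTHORITY = {
    ("attorney-a", "matter-100"): {"file", "send", "sign", "submit"},
    ("paralegal-b", "matter-100"): {"send"},
}

# Hypothetical rule: these actions go to a supervisor when a known actor
# on the matter lacks the authority to take them alone.
ESCALATABLE = {"file", "submit"}


def decide(req: Request) -> Decision:
    granted = AUTHORITY.get((req.actor, req.matter))
    if granted is None:
        return Decision.REFUSE      # actor has no standing on this matter
    if req.action in granted:
        return Decision.APPROVE
    if req.action in ESCALATABLE:
        return Decision.ESCALATE    # known actor, insufficient authority
    return Decision.REFUSE
```

The design choice worth noticing is that refusal is the default: an actor the table does not recognize is refused rather than escalated, so an unknown identity never generates work for a human reviewer.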

We don’t tune models or draft documents.

We govern what AI-touched work is allowed to do under a firm’s or lawyer’s name, and leave behind sealed evidence of each decision.
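One common way to make a decision record tamper-evident is a hash chain, where each entry commits to the one before it. The sketch below uses that technique as a stand-in; how Thinking OS™ actually seals its evidence is not described here, and the function names and record fields are assumptions made for illustration.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(prev_hash: str, decision: dict) -> str:
    """Hash a decision together with the hash of the entry before it."""
    payload = json.dumps({"prev": prev_hash, "decision": decision}, sort_keys=True)
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


def append_decision(log: list[dict], decision: dict) -> None:
    """Append a decision, chaining it to the current tail of the log."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    log.append({
        "decision": decision,
        "prev": prev_hash,
        "hash": _digest(prev_hash, decision),
    })


def verify(log: list[dict]) -> bool:
    """Recompute every hash; any edited or reordered entry breaks the chain."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash:
            return False
        if entry["hash"] != _digest(prev_hash, entry["decision"]):
            return False
        prev_hash = entry["hash"]
    return True
```

A chain like this only detects tampering if the most recent hash is also stored somewhere the log's owner cannot quietly rewrite; on its own it proves internal consistency, not authenticity.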

For states experimenting with agentic AI at the policy layer, the same principle applies:

data perimeters protect what models can see;

action gates protect what AI can cause to happen.

This Is Not Just a State Experiment. It’s a Signal.

Once governments and enterprises let AI into the surfaces that shape policy, law, or money flow, the questions change:

– Who is allowed to act under our seal?

– Which AI-touched actions are allowed to leave the building?

– Where is the proof of what we approved, refused, or escalated?

Because when systems become agentic, governance has to move to the execution boundary, not stay in the slide deck.

Where we’ve started is law—the hardest environment for identity, authority, deadlines, and evidence that must stand up in court and under regulator scrutiny.

Thinking OS™ is refusal infrastructure for legal AI: a sealed runtime that sits in front of high-risk legal actions, refuses what should never run, and proves what did.

Data perimeters protect what models see.

Refusal infrastructure governs what they’re allowed to do.