The Layer They Won’t Build: Why Thinking OS™ Is the Judgment They’re Not Designed For

The Trap They Can’t See

Every AI company is racing to release agents, copilots, and chat-based interfaces. Billions are being poured into model development, vector routing, and agentic frameworks. And yet, with all this motion, none of them have cracked the core question:

How do we decide what to do, when, and why?

They’ve built systems that act, but not systems that think.

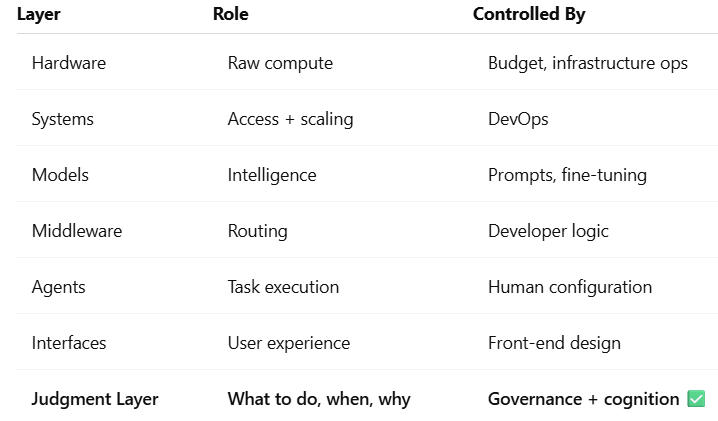

The Infrastructure Stack: What Everyone Else Is Building

Let’s look at the typical AI system, layer by layer:

1. Hardware Layer (Physical Infrastructure)

- What it is: GPUs, TPUs, CPUs (e.g., NVIDIA A100s, Google TPUs)

- Purpose: Raw compute power for training and running models

- Vendors: NVIDIA, AMD, Intel, AWS, GCP, Azure

2. Systems/Cloud Infrastructure Layer

- What it is: Virtual machines, containers and container runtimes like Docker, and orchestration tools like Kubernetes

- Purpose: Scaling, networking, CI/CD

- Vendors: AWS, Azure, GCP, OCI

3. Model Layer

- What it is: Pre-trained or fine-tuned LLMs like GPT-4, Claude, PaLM, Mistral

- Purpose: Text generation, prediction, classification, summarization

- Vendors: OpenAI, Anthropic, Google, Meta, Cohere

4. Middleware / Orchestration Layer

- What it is: LangChain, LlamaIndex, vector DBs, routing frameworks

- Purpose: Coordinates tools, memory, RAG, search, reasoning chains

5. Application / Agent Layer

- What it is: Specific AI agents and tools (Jasper, Copilot, Notion AI, Quinn AI)

- Purpose: Domain-specific task execution

6. Interface / UX Layer

- What it is: Chat UIs, dashboards, voice inputs, APIs

- Purpose: The surface where users interact with AI systems

The Missing Layer: Judgment

Thinking OS™ doesn’t sit on top of these layers. It sits in front of their highest-risk actions.

It is not:

– an agent → it governs agents

– a model → it constrains how models are allowed to act

– middleware → it gates what orchestration is allowed to execute

– a UX tool → it enforces boundaries behind the UI

Thinking OS™ is Refusal Infrastructure.

It installs the Judgment Layer as a sealed governance system in front of high-risk actions. That layer decides which actions may proceed, which must be refused, and which are routed for supervision, then seals each decision in an auditable artifact.
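To make the three outcomes concrete, here is a minimal, hypothetical sketch of a pre-execution gate. It is not Thinking OS™'s implementation: the `Action` fields, the `POLICY` table, the role names, and the `decide` function are all assumptions made up for illustration. The only idea taken from the text is that every governed action is checked before it runs and ends up approved, refused, or routed for supervision.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    APPROVE = "approve"
    REFUSE = "refuse"
    SUPERVISE = "supervise"  # routed to a human supervisor before execution


@dataclass(frozen=True)
class Action:
    actor: str  # who is asking to act (hypothetical field)
    role: str   # the authority the actor holds (hypothetical field)
    kind: str   # e.g. "send_payment" (hypothetical action type)
    risk: int   # 0 = low; higher = riskier (hypothetical scale)


# Hypothetical policy: which roles may perform each kind of action,
# and the risk ceiling above which a human must supervise.
POLICY = {
    "send_payment": {"roles": {"finance"}, "max_unsupervised_risk": 2},
    "file_motion": {"roles": {"attorney"}, "max_unsupervised_risk": 1},
}


def decide(action: Action) -> Verdict:
    """Decide, before execution, whether an action may proceed."""
    rule = POLICY.get(action.kind)
    if rule is None:
        # Unknown action types are refused by default (fail closed).
        return Verdict.REFUSE
    if action.role not in rule["roles"]:
        # The actor lacks authority for this kind of action.
        return Verdict.REFUSE
    if action.risk > rule["max_unsupervised_risk"]:
        # Authorized, but too risky to run without supervision.
        return Verdict.SUPERVISE
    return Verdict.APPROVE
```

For example, `decide(Action("ana", "finance", "send_payment", 1))` returns `Verdict.APPROVE`, the same request at risk 3 returns `Verdict.SUPERVISE`, and an unlisted action type is refused. Failing closed on unknown actions is a design choice in this sketch: the gate refuses anything it has no rule for, rather than letting it through.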

Why the Judgment Layer Is the Most Powerful Layer

Because it controls how every other layer is used: technically, strategically, and cognitively.

The Judgment Layer Decides:

- Which actions are allowed to execute at all

- Which models should be used, and how

- Which tasks are worth doing

- What the desired outcome is

- How to compress noise into clarity

- How to prevent hallucination, drift, and overload

- When not to act

- Who gets to act, and under what conditions

In other words: it’s the layer that turns “we could do this” into “we are (or are not) allowed to do this, under this authority, right now.”

Analogy:

You can have the fastest car (hardware), a tuned engine (models), and the best driver-assist system (agents). But the judgment layer is the one who knows where to go, when to brake, and whether the trip is even worth taking.

Why They Can’t Build It

Every major AI initiative has felt that something is missing. But they’re all circling the gap without the ability to fill it.

- They have models, but no mission

- They have agents, but no governors

- They have middleware, but no orchestration of orchestration

- They have dashboards, but no decision compression

Instead, they keep:

- Fine-tuning models (tactic)

- Automating workflows (efficiency)

- Building dashboards (visibility)

- Launching copilots (execution)

But none of these solve:

- “How do we think?”

- “How do we decide, with context, continuity, and consequence?”

- “How do we prevent self-inflicted complexity at scale?”

Thinking OS™ Cracked It

Instead of adding yet another agent, it:

– Installed a sealed governance layer in front of high-risk actions

– Structured pre-execution decision protocols into a runtime that every governed action must pass through

– Ensured each governed action produces a sealed approval, refusal, or supervised-override artifact

– Escaped the “more prompts, more agents” trap and made refusal and proof installable as infrastructure
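The sealed approval, refusal, or supervised-override artifacts described above could be made tamper-evident in several ways. One common technique is to sign a canonical serialization of each decision record. The sketch below uses HMAC-SHA256 from Python's standard library. The record fields, the `seal` and `verify` helpers, and the in-code demo key are hypothetical, and this is not a description of how Thinking OS™ actually seals its artifacts.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone

# Hypothetical demo key; a real deployment would load this from a secrets store.
SEALING_KEY = b"demo-key-do-not-use-in-production"

# The three artifact kinds named in the text.
VERDICTS = {"approve", "refuse", "supervised_override"}


def _canonical(record: dict) -> bytes:
    # Sorted keys and fixed separators give a stable byte string to sign.
    return json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")


def seal(action_id: str, verdict: str, authority: str, key: bytes = SEALING_KEY) -> dict:
    """Build a decision record and attach an HMAC-SHA256 seal over it."""
    if verdict not in VERDICTS:
        raise ValueError(f"unknown verdict: {verdict}")
    record = {
        "action_id": action_id,
        "verdict": verdict,
        "authority": authority,  # who or what authorized the decision
        "decided_at": datetime.now(timezone.utc).isoformat(),
    }
    tag = hmac.new(key, _canonical(record), hashlib.sha256).hexdigest()
    return {"record": record, "seal": tag}


def verify(artifact: dict, key: bytes = SEALING_KEY) -> bool:
    """Recompute the seal; any change to the record makes this return False."""
    expected = hmac.new(key, _canonical(artifact["record"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, artifact["seal"])
```

For example, `seal("act-001", "refuse", "policy:payments-v1")` returns an artifact that `verify` accepts. If someone later edits the stored verdict to `"approve"`, `verify` returns `False`. One trade-off of HMAC is that anyone who verifies must hold the same secret key. Where outside auditors need to check artifacts independently, asymmetric signatures (for example, Ed25519) are the usual alternative.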

Final Truth: Judgment Wins

They’ve been building tools without a judgment layer. We built the governance system that decides which tools are allowed to act at all.

That’s why Thinking OS™ is not an app, model, or plug-in. It’s a sealed governance runtime that sits in front of them.

And the only way to access it now… is to license the right to route your high-risk actions through it.