Thinking OS™

The Safety Layer for Legal AI

A seatbelt for AI. No matter how advanced the system, Thinking OS™ refuses unsafe actions before they can reach a court, regulator, or client.

Why Legal Needs This

In law, the problem isn’t just what AI says — it’s what gets filed, sent, or approved under your name.

At its core, Thinking OS™ answers one question:

“Who may act, on what, under whose authority?”

For Law Firms:

Every decision artifact is sealed, hashed, timestamped, and admissible. Privilege stays protected, audit trails stay intact.

For Legal Tech Vendors:

Plug-in governance with zero re-engineering. Your models stay yours — liability stays sealed inside refusal and approval artifacts, not scattered across logs.

What It Does

Think of Thinking OS™ as:

A referee

It blows the whistle when rules are broken.

A lockbox

Once sealed, nothing inside can be altered.

A gatekeeper

It checks what enters against your rules before the system ever runs.

Thinking OS™ never drafts, files, or signs anything.

It enforces the boundaries you’ve already set — and preserves the proof.

Structural Truth:

- Embedded as a sealed governance layer in your stack.

- Creates refusal artifacts for every blocked or out-of-policy action.

- Artifacts are hashed, signed, and auditable — carrying only the minimum data needed for legal, audit, and regulatory review.

- Governs upstream, before inference, before activation, before error.
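To make "hashed, signed, and auditable" concrete, here is a minimal sketch of how a refusal record could be sealed and later checked for tampering. It is an illustration under stated assumptions, not the Thinking OS™ implementation: the field names, the `seal_artifact` and `verify_artifact` helpers, and the demo signing key are all hypothetical, and SHA-256 with HMAC stands in for whatever hashing and signing scheme the product actually uses.

```python
import hashlib
import hmac
import json
from datetime import datetime, timezone

# Hypothetical demo key; a real deployment would keep signing keys in a KMS or HSM.
SIGNING_KEY = b"demo-key-not-for-production"


def _canonical_bytes(record: dict) -> bytes:
    # Sorted keys and fixed separators so the same record always serializes identically.
    return json.dumps(record, sort_keys=True, separators=(",", ":")).encode("utf-8")


def seal_artifact(actor: str, action: str, rule_codes: list[str], reason: str) -> dict:
    """Build a minimal refusal record, then attach a SHA-256 digest and an HMAC signature."""
    record = {
        "actor": actor,                    # who acted
        "action": action,                  # what they attempted
        "rule_codes": sorted(rule_codes),  # which rules fired (codes only, no matter content)
        "reason": reason,                  # why the action was refused
        "decision": "REFUSED",
        "timestamp": datetime.now(timezone.utc).isoformat(),
    }
    payload = _canonical_bytes(record)
    return {
        "record": record,
        "sha256": hashlib.sha256(payload).hexdigest(),
        "hmac_sha256": hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest(),
    }


def verify_artifact(artifact: dict) -> bool:
    """Recompute digest and signature; any change to the record makes both checks fail."""
    payload = _canonical_bytes(artifact["record"])
    digest_ok = hmac.compare_digest(
        hashlib.sha256(payload).hexdigest(), artifact["sha256"]
    )
    expected_sig = hmac.new(SIGNING_KEY, payload, hashlib.sha256).hexdigest()
    sig_ok = hmac.compare_digest(expected_sig, artifact["hmac_sha256"])
    return digest_ok and sig_ok
```

In this sketch the artifact carries only actor, action, rule codes, and a reason, which mirrors the "minimum data" idea above; editing any field after sealing causes `verify_artifact` to return `False`.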

The First Principle of Legal AI Safety

Every legal workflow ultimately reduces to three governed variables:

• Who may act

• On what

• Under whose authority

Thinking OS™ operationalizes this principle at runtime — before any model, agent, or human signs, files, or sends anything.
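The three governed variables can be pictured as a single pre-execution check. The sketch below is illustrative only: the `Request` type, the `POLICY` table, the role names, and the reason codes are hypothetical and do not describe the actual SEAL runtime or its API. It also assumes that an action the policy does not recognize is routed for supervision rather than silently approved.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Optional


class Decision(Enum):
    APPROVE = "approve"
    REFUSE = "refuse"
    ROUTE = "route_for_supervision"


@dataclass(frozen=True)
class Request:
    actor_role: str           # who is trying to act
    action: str               # what they are trying to do
    authority: Optional[str]  # whose authority covers it, e.g. a consent record ID


# Hypothetical policy table: permitted roles per action, and whether
# documented authority (such as client consent) is required.
POLICY = {
    "file_motion": {"roles": {"attorney"}, "needs_authority": True},
    "send_client_letter": {"roles": {"attorney", "paralegal"}, "needs_authority": False},
}


def evaluate(req: Request) -> tuple[Decision, str]:
    """Return a decision and a reason code before anything is filed, sent, or signed."""
    rule = POLICY.get(req.action)
    if rule is None:
        # Assumption: unknown actions go to a human supervisor.
        return Decision.ROUTE, "UNKNOWN_ACTION"
    if req.actor_role not in rule["roles"]:
        return Decision.REFUSE, "ACTOR_NOT_PERMITTED"
    if rule["needs_authority"] and not req.authority:
        return Decision.REFUSE, "AUTHORITY_MISSING"
    return Decision.APPROVE, "OK"
```

For example, `evaluate(Request("attorney", "file_motion", None))` refuses with the code `AUTHORITY_MISSING`, because the filing has no documented authority behind it.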

Proof of Trust

Here’s what you (and your GC) can count on:

01 Immutable Refusal Logs → every refusal generates a sealed artifact.

02 Audit-Ready Evidence → artifacts are admissible in regulatory and litigation settings.

03 Privilege Protection → no prompts or model traces exposed; artifacts show governance anchors and codes, not full client matter content.

04 High-Grade Security → runs in a hardened cloud environment with enterprise-grade controls; internal models and logic remain fully sealed.

Sealed Artifact, Not a Screenshot

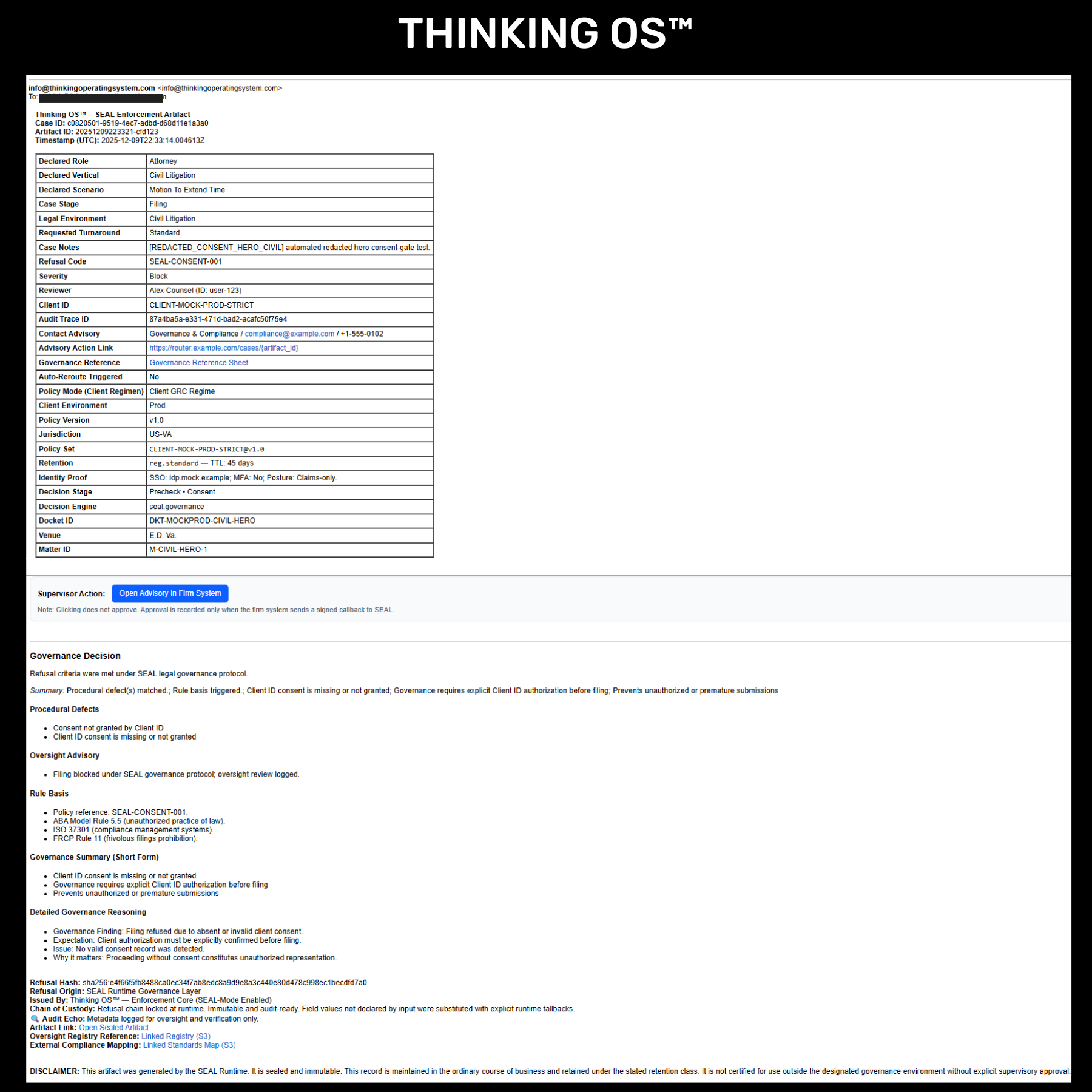

This is a real refusal artifact generated by Thinking OS™ when an attorney tried to file a motion without documented client consent.

The SEAL Legal runtime blocked the action and sealed this decision record: who acted, what they attempted, which rules fired, and why the filing was refused, all anchored by a tamper-evident hash.

It’s court-grade evidence for insurers, regulators, and GCs, without exposing any client matter content or model prompts.

Pilot With Confidence

SEAL Pilots are short-term, high-integrity enforcement windows:

- 60- to 90-day enforcement window

- $75,000 pilot license (credited toward full license)

- One sealed legal domain only: Criminal Defense, Civil Litigation, Corporate & Business Law, Intellectual Property Law, Immigration Law, etc.

- Shared approval and refusal logs only — no model trace, no IP exposure

- Throughout the pilot, SEAL can only approve, refuse, or route for supervision. It never drafts or files on its own.

This is not “testing.” It’s sealed cognition in field conditions.

For Law Firms

- Protect client privilege with refusal and approval artifacts.

- Show regulators and courts admissible logs.

- Embed refusal upstream — malpractice risk is stopped at the gate, not discovered after the fact.

For Legal Tech Vendors

- Plug into your stack with a simple API.

- Govern liability without retraining models.

- Keep your UX yours — Thinking OS™ stays upstream as a sealed judgment layer, not a competing product.

Bottom Line

You don’t need another model. You need refusal infrastructure.

Thinking OS™ ensures that what should never run never runs under the seal.

No ungoverned drift. No silent contradictions. No tampering with the record.
