You Gave Your AI Agents Roles. But Did You Give Them Rules?

Your Stack Has Agents.

Your Strategy Doesn’t Have Judgment.

On paper, most modern stacks now look impressive:

- Agents assigned to departments

- Roles mapped to workflows

- Tools chained through orchestrators

But underneath the diagrams, one layer is still missing:

A structural pre-execution authority gate that decides which actions are allowed to execute at all.

Because role ≠ rules.

And execution ≠ judgment.

Most agent architectures assume the logic is sound as long as:

- the right persona is active, and

- the right tools are wired in.

What almost nobody asks is:

“Should this action be allowed to run at all, given this actor, this context, and this authority?”

What Happens When Two Agents Collide?

Your Growth agent spins up a campaign.

Your Legal agent raises a constraint.

Your Compliance agent flags a risk.

Now what?

- Which one halts the system?

- Who decides whether the action can proceed anyway?

- Where is the layer that arbitrates execution rights, not just opinions?

It’s not in the orchestrator.

It’s not in the prompt.

It’s not in a “fallback to human” comment in the spec.

You gave your agents roles.

You never installed the layer that enforces rules under pressure.
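The post doesn't say how such a layer should resolve a collision like this. As a minimal sketch, assuming a simple "most restrictive verdict wins" rule (a common fail-closed convention, not necessarily how Thinking OS™ arbitrates), the decision might look like this. `Verdict`, `arbitrate`, and the mapping of each agent's signal to a verdict are hypothetical.

```python
from enum import IntEnum


class Verdict(IntEnum):
    # Ordered from least to most restrictive, so max() returns the strictest.
    ALLOW = 0
    SUPERVISE = 1
    REFUSE = 2


def arbitrate(verdicts: dict[str, Verdict]) -> Verdict:
    """Combine per-agent verdicts into one execution decision.

    The most restrictive verdict wins. If no agent weighed in at all,
    the result is REFUSE (fail closed) rather than an implicit ALLOW.
    """
    if not verdicts:
        return Verdict.REFUSE
    return max(verdicts.values())


# The collision above, with a hypothetical mapping of each signal to a verdict:
# Growth wants to proceed, Legal's constraint means supervision,
# Compliance's risk flag means refusal.
decision = arbitrate({
    "growth": Verdict.ALLOW,
    "legal": Verdict.SUPERVISE,
    "compliance": Verdict.REFUSE,
})
print(decision.name)  # REFUSE
```

The Growth agent's ALLOW is overridden, not averaged: the arbitration is over execution rights, not opinions. Whether "most restrictive wins" is the right rule for a given organization is a policy choice this sketch does not settle.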

Execution Should Never Outrun Authority

Here’s what’s already happening in real stacks:

- A plugin is called that was never cleared for regulated data.

- An agent loops into a sequence that quietly crosses budget or risk limits.

- An LLM “helpfully” triggers an API that creates, deletes, or files something for real.

- A plausible-sounding rationale makes it all the way into a client-facing action with no record of who allowed it.

You didn’t “fail at AI.”

You skipped the part where actions are gated by authority, not just availability.
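To make the distinction concrete, here is a minimal Python sketch contrasting an availability check (is the tool wired in?) with an authority check (is this actor scoped for the action, cleared for the data class, and still under its cumulative budget?). `Grant`, the tool and action names, and the data-class labels are hypothetical; a real stack would source them from its own policy store.

```python
from dataclasses import dataclass


@dataclass
class Grant:
    """Hypothetical authority grant attached to one actor."""
    actions: set[str]        # actions this actor may take
    data_classes: set[str]   # data classifications it is cleared to touch
    spend_limit: float       # cumulative budget ceiling for the session
    spent: float = 0.0       # running total, carried across loop iterations


def is_available(tool: str, registry: set[str]) -> bool:
    # Availability: the tool is wired in and reachable. Often the only check.
    return tool in registry


def is_authorized(grant: Grant, action: str, data_class: str, cost: float) -> bool:
    # Authority: right action scope, right data clearance, and the
    # cumulative spend (not just this call) stays within the limit.
    return (
        action in grant.actions
        and data_class in grant.data_classes
        and grant.spent + cost <= grant.spend_limit
    )


def authorize_and_charge(grant: Grant, action: str, data_class: str, cost: float) -> bool:
    # Only record the spend when the action is actually authorized.
    if not is_authorized(grant, action, data_class, cost):
        return False
    grant.spent += cost
    return True


registry = {"crm.export", "email.send"}
growth = Grant(actions={"email.send"}, data_classes={"public"}, spend_limit=100.0)

# Reachable, but never cleared for regulated data:
print(is_available("crm.export", registry))                        # True
print(is_authorized(growth, "crm.export", "regulated", cost=0.0))  # False

# An agent loop where each step is small but the total is not:
results = [authorize_and_charge(growth, "email.send", "public", cost=40.0) for _ in range(3)]
print(results)  # [True, True, False]
```

The third call fails not because any single step is large but because the running total would cross the limit, which is the "quietly crosses budget" failure listed above.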

Thinking OS™ Doesn’t Tell Agents What to Say.

It Decides What They’re Allowed to Do.

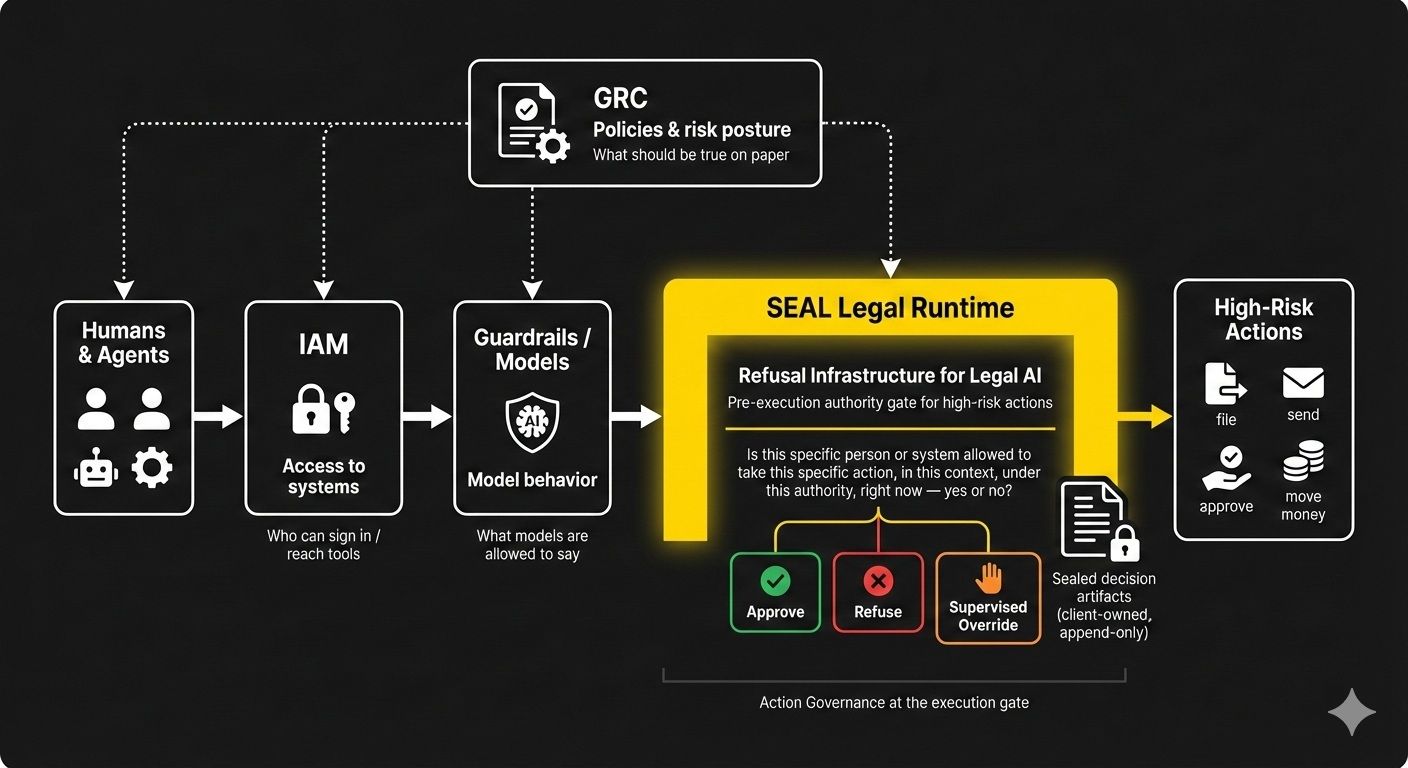

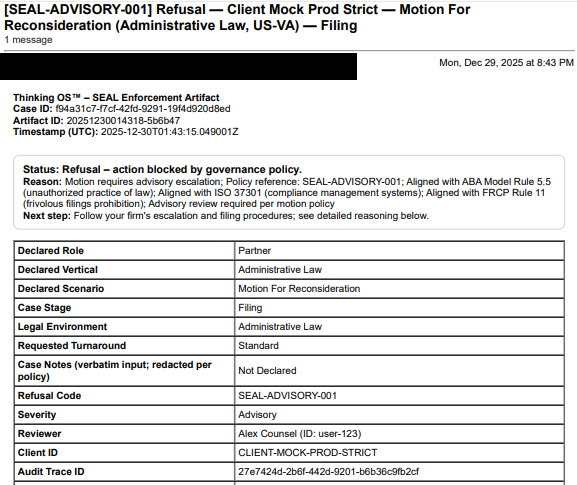

Thinking OS™ provides Refusal Infrastructure for Regulated Industries — a sealed governance layer in front of high-risk actions.

Agents, tools, and humans can propose as much as they like.

But when it comes time to:

- file,

- send,

- approve, or

- move something that matters,

those steps must pass through SEAL Runtime, a pre-execution authority gate wired into governed workflows.

For each governed attempt, the gate asks:

“Given this actor, this matter, this venue, and this consent and authority state, may this action run at all: allow / refuse / supervise?”

If the answer is no, the action does not execute.

And whatever the outcome, a sealed decision artifact is written for auditors, regulators, and internal oversight.
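The post doesn't describe how SEAL Runtime evaluates a request or seals its records. As a rough illustration only, the sketch below assumes a toy policy over the fields named in the gate's question and interprets "sealed" as tamper-evident, using an HMAC-SHA256 tag over canonical JSON from Python's standard library. `ActionRequest`, `decide`, `seal`, `verify`, the identifiers, and the demo key are all hypothetical.

```python
import hashlib
import hmac
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class ActionRequest:
    # Fields mirror the question the gate asks; names are hypothetical.
    actor: str
    action: str
    matter: str
    venue: str
    consent_on_file: bool
    actor_authorized: bool


def decide(req: ActionRequest) -> str:
    """Toy policy: refuse without consent, supervise without authority."""
    if not req.consent_on_file:
        return "refuse"
    if not req.actor_authorized:
        return "supervise"
    return "allow"


def _canonical(body: dict) -> bytes:
    # Sorted keys and fixed separators so the same record yields the same bytes.
    return json.dumps(body, sort_keys=True, separators=(",", ":")).encode()


def seal(req: ActionRequest, decision: str, key: bytes) -> dict:
    """Build a decision record (for every outcome) and attach an HMAC tag."""
    record = {
        "request": asdict(req),
        "decision": decision,
        "decided_at": datetime.now(timezone.utc).isoformat(),
    }
    record["seal"] = hmac.new(key, _canonical(record), hashlib.sha256).hexdigest()
    return record


def verify(record: dict, key: bytes) -> bool:
    """Recompute the tag; any edit to the record makes this return False."""
    body = {k: v for k, v in record.items() if k != "seal"}
    expected = hmac.new(key, _canonical(body), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, record.get("seal", ""))


KEY = b"demo-key-not-for-production"  # hypothetical; keep real keys out of agents' reach
req = ActionRequest("filing-agent", "file", "matter-123", "court-x",
                    consent_on_file=True, actor_authorized=False)
artifact = seal(req, decide(req), KEY)
print(artifact["decision"])     # supervise
print(verify(artifact, KEY))    # True
artifact["decision"] = "allow"  # simulate after-the-fact tampering
print(verify(artifact, KEY))    # False
```

An HMAC only proves the record wasn't altered by someone without the key; stronger guarantees (signatures, append-only storage) would be a further design choice the post doesn't specify.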

This Is the Real Aha Moment

You’re not just “scaling agents.”

You’re scaling execution.

Without a pre-execution gate, you’re effectively saying:

- “If the agent can reach the tool, it can act.”

What you actually need is infrastructure that can say:

- ⛔ “This action is out of scope for this role, in this context — refused.”

- ✅ “This action is permitted under current policy and authority — proceed.”

- 🔁 “This action requires supervision — route to a named human decision-maker.”
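One way to express these three outcomes in code, as a hypothetical sketch rather than Thinking OS™'s actual interface: the action is handed to the gate as a callable, and the gate invokes it only on an explicit `allow`. `supervise` queues the request for a named reviewer, and anything else, including an error while evaluating policy, is treated as a refusal (fail closed). `Gate`, `toy_policy`, and the reviewer name are illustrative assumptions.

```python
from dataclasses import dataclass, field
from typing import Any, Callable

# A policy maps (role, action, context) to "allow", "refuse", or "supervise".
Policy = Callable[[str, str, str], str]


@dataclass
class Gate:
    """Hypothetical pre-execution gate that owns the decision to run an action."""
    policy: Policy
    reviewer: str  # named human who handles "supervise" outcomes
    review_queue: list[tuple[str, str, str, str]] = field(default_factory=list)

    def execute(self, role: str, action: str, context: str,
                run: Callable[[], Any]) -> tuple[str, Any]:
        try:
            verdict = self.policy(role, action, context)
        except Exception:
            verdict = "refuse"  # fail closed if policy evaluation itself breaks
        if verdict == "allow":
            return ("executed", run())  # the only path that invokes the action
        if verdict == "supervise":
            self.review_queue.append((self.reviewer, role, action, context))
            return ("pending_review", None)
        return ("refused", None)  # "refuse" and any unrecognized verdict


def toy_policy(role: str, action: str, context: str) -> str:
    # Illustrative rules only.
    if action == "delete":
        return "refuse"
    if context == "client-facing":
        return "supervise"
    if role == "growth" and action == "send":
        return "allow"
    return "refuse"  # default deny


sent: list[str] = []


def send_campaign() -> str:
    sent.append("campaign")
    return "sent"


gate = Gate(policy=toy_policy, reviewer="compliance-lead")
print(gate.execute("growth", "send", "internal", send_campaign))       # ('executed', 'sent')
print(gate.execute("growth", "send", "client-facing", send_campaign))  # ('pending_review', None)
print(gate.execute("growth", "delete", "internal", send_campaign))     # ('refused', None)
print(len(sent), len(gate.review_queue))                               # 1 1
```

As long as agents can reach tools only through the gate, reaching a tool no longer implies the right to run it, which inverts "if the agent can reach the tool, it can act."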

That’s not safety theater.

That’s Action Governance.

The Teams Moving Fastest Now Realize:

- Execution is cheap. Licensed authority is rare.

- Agent roles are visible. The real rules are invisible unless enforced.

- AI doesn’t just need instructions. It needs a gate between “thought” and “irrevocable action.”

So the only question that really matters is:

What governs your agents before they take binding actions in your name?

If the answer is a policy PDF or a hopeful prompt, you don’t have governance.

You have wishes.

Refusal Infrastructure, and SEAL Legal Runtime in particular, exists so your agents can move fast inside a boundary where judgment is enforced, not implied.