Thinking OS™ Could Replace Half of What AI Policy Is Trying to Do

What if AI governance didn’t need to catch systems after they moved — because a pre-execution gate refused high-risk actions that never should have run in the first place?

That’s not a metaphor. That’s the purpose of Thinking OS™, Refusal Infrastructure for Legal AI: a sealed governance layer in front of high-risk legal actions.

Not by writing new rules.

Not by aligning LLMs.

But by enforcing who may do what, under which authority, at the moment of action.

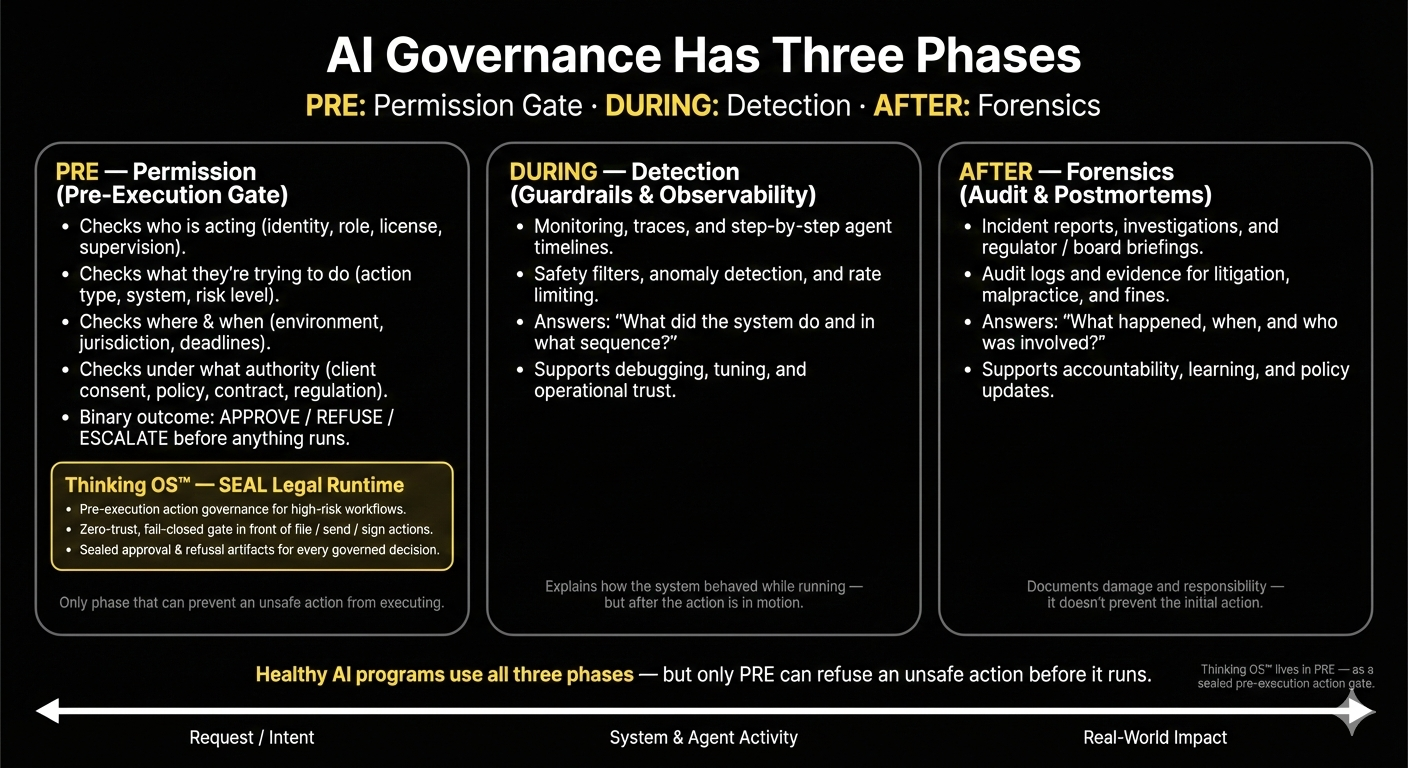

Governance Doesn’t Scale When It’s Only Downstream

Most AI policy frameworks today govern after the fact:

- We red-team emergent behavior

- We score bias in generated output

- We bolt compliance review pipelines onto existing workflows

None of that stops a system from:

- filing something it shouldn’t,

- sending something that wasn’t cleared, or

- approving something outside delegated authority.

Downstream review doesn’t scale past heroic supervision, and it doesn’t make AI obey. It just asks the system to explain itself later.

Refusal Logic Is Not a Preference — It’s a Precondition

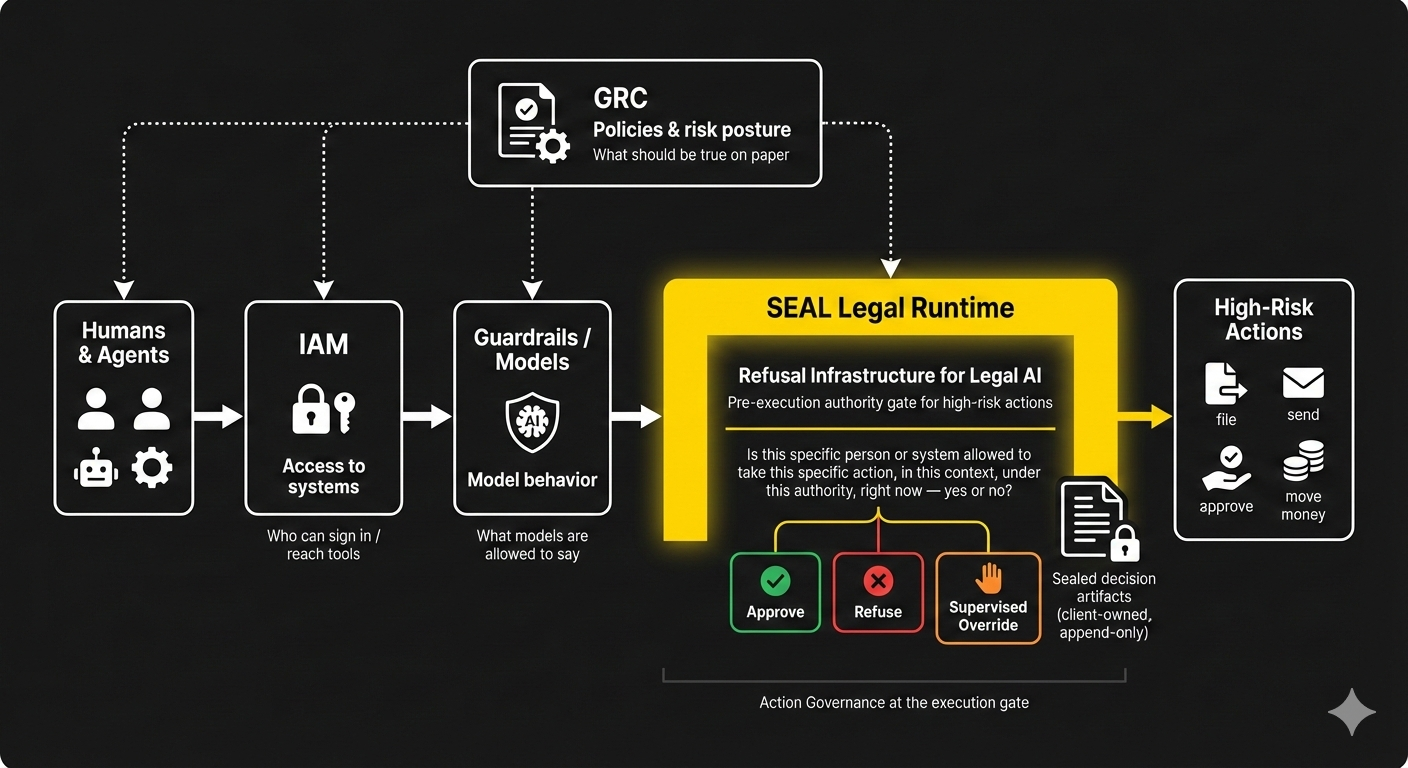

Thinking OS™ operates in front of high-risk actions as a refusal-first governance architecture.

The discipline behind it is Action Governance:

For each wired step (file, send, approve, move money), the gate asks one question:

“Given this actor, this matter, this venue, this consent state, may this action run at all: allow / refuse / supervise?”

The core behavior is simple:

- If identity, scope, authority, or consent fails to resolve, the action does not execute.

- If everything is in bounds, tools proceed as they do today.

- Either way, the decision is written into a sealed, tamper-evident artifact the client owns.
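To make the allow / refuse / supervise question above concrete, here is a minimal sketch of a pre-execution check. It is illustrative only: the `ActionRequest` fields, the `AUTHORITY` table, and the `decide` function are hypothetical names invented for this example, not the actual interface of Thinking OS™ or SEAL Legal Runtime.

```python
from dataclasses import dataclass
from enum import Enum


class Verdict(Enum):
    ALLOW = "allow"
    REFUSE = "refuse"
    SUPERVISE = "supervise"


@dataclass(frozen=True)
class ActionRequest:
    actor: str           # who is asking
    action: str          # e.g. "file", "send", "approve"
    matter: str          # the matter the action belongs to
    venue: str           # where the action would take effect
    consent_given: bool  # client consent state


# Hypothetical authority table, assumed to be loaded from an IdP or
# governance system. Keys are (actor, action) pairs.
AUTHORITY = {
    ("associate_a", "file"): {
        "matters": {"M-100"},
        "venues": {"state_court"},
        "needs_supervisor": True,
    },
    ("partner_b", "file"): {
        "matters": {"M-100", "M-200"},
        "venues": {"state_court", "federal_court"},
        "needs_supervisor": False,
    },
}


def decide(req: ActionRequest) -> Verdict:
    """Refuse unless identity, scope, authority, and consent all resolve."""
    grant = AUTHORITY.get((req.actor, req.action))
    if grant is None:                      # identity or authority unknown
        return Verdict.REFUSE
    if req.matter not in grant["matters"]:  # out of scope
        return Verdict.REFUSE
    if req.venue not in grant["venues"]:    # wrong venue
        return Verdict.REFUSE
    if not req.consent_given:               # consent missing
        return Verdict.REFUSE
    if grant["needs_supervisor"]:
        return Verdict.SUPERVISE
    return Verdict.ALLOW
```

The design choice worth noting is the default: every path that fails to resolve returns `REFUSE`, so an unknown actor or a missing table entry can never fall through to `ALLOW`.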

This isn’t alignment by fine-tuning.

This is governance by structural veto at the execution edge.

AI Policy Writes Rules. Refusal Infrastructure Executes Them.

Regulators are drafting the next wave of AI requirements:

- Explainability standards

- Risk tiers and obligations

- Data and model disclosures

- Governance and documentation expectations

Even when they’re strong, most of these assume:

- vendors will cooperate, and

- organizations have some way to turn written policies into live constraints on what systems are allowed to do.

Thinking OS™ doesn’t assume. It enforces.

- Roles and authorities come from your IdP and governance systems.

- High-risk actions in wired workflows must pass through SEAL Legal Runtime, the pre-execution gate.

- Every decision (allow, refuse, or supervised override) produces a client-owned artifact that maps directly to your policy regime.
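One common way to make a decision record tamper-evident is a hash chain, where each entry commits to the hash of the entry before it. The sketch below shows that general technique using only the Python standard library. It is an assumption about how such an artifact could be built, not a description of how SEAL Legal Runtime seals its records; `seal`, `append_decision`, `verify`, and `GENESIS` are hypothetical names.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(record: dict, prev_hash: str) -> str:
    # Canonical JSON (sorted keys) so the same content always hashes the same.
    body = {"record": record, "prev_hash": prev_hash}
    return hashlib.sha256(json.dumps(body, sort_keys=True).encode("utf-8")).hexdigest()


def seal(record: dict, prev_hash: str) -> dict:
    """Wrap a decision record with a hash that also covers the previous entry."""
    return {"record": record, "prev_hash": prev_hash, "hash": _digest(record, prev_hash)}


def append_decision(chain: list, record: dict) -> None:
    prev_hash = chain[-1]["hash"] if chain else GENESIS
    chain.append(seal(record, prev_hash))


def verify(chain: list) -> bool:
    """Return True only if no entry was altered, reordered, or dropped mid-chain."""
    prev_hash = GENESIS
    for entry in chain:
        if entry["prev_hash"] != prev_hash:
            return False
        if entry["hash"] != _digest(entry["record"], entry["prev_hash"]):
            return False
        prev_hash = entry["hash"]
    return True
```

Usage: append each decision as it is made (for example `append_decision(chain, {"actor": "partner_b", "action": "file", "decision": "allow"})`), then run `verify(chain)` at audit time. A plain hash chain detects edits but does not by itself prove who wrote the entries; that would need signatures or external anchoring, which this sketch leaves out.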

Policy defines the “should.”

Refusal infrastructure decides, in real time, whether a given action earns the right to happen.

Law, Now Embedded

Refusal architecture changes where “law” actually lives in the stack.

Governance stops being:

- a PDF next to a deployment, or

- a slide in an audit deck,

and becomes:

- compiled authority boundaries that execute at runtime,

- directly in front of the “file / send / approve” buttons that matter.

If an action cannot cross the gate without licensed authority:

- malformed or out-of-scope reasoning can’t silently turn into a filing,

- unlicensed agents can’t quietly send “just one more” client communication,

- approvals can’t creep past delegated limits without leaving a trail.
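As a rough illustration of a gate sitting directly in front of an action, the sketch below wraps an approval function so that it cannot run past a delegated limit and so that every attempt, allowed or refused, leaves a record. All names here (`gated`, `Refused`, `DELEGATED_LIMITS`, `AUDIT_TRAIL`, `approve_payment`) are hypothetical and chosen for this example only.

```python
import functools


class Refused(Exception):
    """Raised when the gate blocks an action before it executes."""


# Hypothetical delegated approval limits per actor, in currency units.
DELEGATED_LIMITS = {"associate_a": 1_000, "partner_b": 50_000}

# In-memory trail for illustration; a real system would persist this.
AUDIT_TRAIL = []


def gated(action_name):
    """Decorator: check the actor's delegated limit before the action runs."""
    def wrap(fn):
        @functools.wraps(fn)
        def inner(actor, amount):
            limit = DELEGATED_LIMITS.get(actor)
            allowed = limit is not None and amount <= limit
            # Record the decision whether or not the action proceeds.
            AUDIT_TRAIL.append({
                "actor": actor,
                "action": action_name,
                "amount": amount,
                "decision": "allow" if allowed else "refuse",
            })
            if not allowed:
                raise Refused(f"{actor} may not {action_name} {amount}")
            return fn(actor, amount)
        return inner
    return wrap


@gated("approve")
def approve_payment(actor, amount):
    # The business logic only ever runs after the gate has allowed it.
    return f"approved {amount} by {actor}"
```

Calling `approve_payment("partner_b", 20_000)` proceeds, while `approve_payment("associate_a", 20_000)` raises `Refused` before the function body runs. In both cases an entry lands in `AUDIT_TRAIL`, which is the "leaving a trail" property described above.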

You’re not preventing models from ever making bad suggestions.

You’re preventing those suggestions from turning into binding actions without judgment on the record.

The Stack Shift Is Structural

Thinking OS™ doesn’t compete with OpenAI, Anthropic, or your favorite vendor tools.

It governs what their outputs are allowed to become inside legal workflows.

- Models, agents, and tools can propose options.

- Your people still decide strategy and substance.

- SEAL Legal Runtime decides whether the resulting action is allowed to execute in your systems at all — and proves it.

In that world, policy is no longer the “top layer.”

Refusal at the action boundary is.

The future of AI governance is less about adding checklists and more about who owns the NO and the evidence behind it, wired directly into the stack.

For Legal, Enterprise, and National Governance Leaders:

If your AI oversight does not include a pre-execution authority gate with durable decision artifacts, it is structurally incomplete.

No enforcement that only happens after execution is:

- fast enough,

- safe enough, or

- defensible enough

for environments where filings, funds, or regulatory records are on the line.

Thinking OS™ isn’t here to interpret the law for you.

It’s here to embed your law (your policies, your roles, your authorities) at the point where actions are taken in your name.

Let regulators write policy.

Let vendors build tools.

Refusal infrastructure is the layer that refuses what should never have run, and proves that it refused.